More pixels, better pixels. The demand for ever higher image quality shows no sign of slowing. As hardware improves, there is pressure to push content quality even further toward photoreal fidelity. Technology is advancing so rapidly that the creative freedom of a format-agnostic, resolution-independent future is within reach.

“The move to higher resolution imaging has been a continuing theme in the media industry for many years and will remain a key battleground for competition,” says Peter Sykes, Strategic Technology Development Manager, Sony PSE. “Parameters include dynamic range, frame rate, colour gamut and bit depth. Creative teams will select the best combination to achieve their objective for the content they are producing.”

From HD to 8K to 32K to ‘infinity K’, the demand for more resolution in visual content remains insatiable. Avid has coined the term ‘(n)K resolution’. “We’ve gone from SD to HD, to UHD, and now we’re at 8K. That trend is going to continue,” says Shailendra Mathur, Avid’s VP of Architecture.

“Photorealism is driving this trend but not everyone wants photorealism,” says Chuck Meyer, Technology Fellow, Grass Valley. “Cinematographers might want to add back film grain for the filmic look. High frame rate movies are criticized for looking like TV. Yet in home theatre people want content to be immersive, higher resolution and they don’t like blocking. So, we have different perceptions and different needs for what technology has to provide.”

Take HDR. A broadcaster might need content in 1080p HLG for newer home TVs, a version in PQ for mobile devices and another in SDR for legacy screens.

“Three renditions, one media content, each monetized,” says Meyer. “Media organizations don’t want to be bound to any parameter. They want to enable the parameters they feel they can monetize.”
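To make the HLG-versus-PQ distinction concrete, here is a minimal Python sketch of the two HDR transfer curves standardised in ITU-R BT.2100 (PQ is also published as SMPTE ST 2084). It is deliberately simplified: it handles a single channel only and leaves out colour-space conversion, the HLG system gamma (OOTF) and the tone mapping a real SDR or HDR conversion would need. The function names are illustrative, not taken from any product.

```python
import math

# SMPTE ST 2084 / BT.2100 PQ constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_encode(nits: float) -> float:
    """PQ inverse EOTF: absolute display luminance in cd/m^2 (0..10000) -> signal 0..1."""
    y = max(0.0, min(nits, 10000.0)) / 10000.0
    ym = y ** M1
    # Note: at 0 cd/m^2 the curve yields a tiny non-zero value (C1 ** M2).
    return ((C1 + C2 * ym) / (1 + C3 * ym)) ** M2


# BT.2100 HLG OETF constants
A = 0.17883277
B = 1 - 4 * A
C = 0.5 - A * math.log(4 * A)


def hlg_encode(e: float) -> float:
    """HLG OETF: relative scene linear light 0..1 -> signal 0..1."""
    e = max(0.0, min(e, 1.0))
    if e <= 1 / 12:
        return math.sqrt(3 * e)      # square-root segment for darker tones
    return A * math.log(12 * e - B) + C  # logarithmic segment for highlights


for nits in (0.1, 100, 1000, 10000):
    print(f"{nits:>7} cd/m^2 -> PQ signal {pq_encode(nits):.3f}")
for e in (0.0, 1 / 12, 0.5, 1.0):
    print(f"scene light {e:.3f} -> HLG signal {hlg_encode(e):.3f}")
```

Note the different inputs: PQ encodes absolute display luminance, while HLG encodes relative scene light, which is one reason each rendition is a separate deliverable rather than a relabelled copy.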

Epic Games’ Unreal Engine 4 can already achieve very high quality visuals, but the demand for even greater fidelity drove development of its Nanite geometry system, coming in 2021 as part of UE5.

“Nanite will enable content creators to burst through the limits imposed by traditional rendering technologies and enable the use of complex, highly detailed assets in their real-time projects,” says Marc Petit, General Manager for Unreal Engine at Epic Games. “This technology will be paired with Lumen, our real-time photorealistic rendering solution, for an unprecedented level of photorealism in real-time graphics.”

UK producer and postproduction company Hangman Films regularly captures music videos at 8K with Red cameras and recently in 12K with the Blackmagic Design URSA Mini Pro.

“Greater resolution gives you greater flexibility in post but even if you shoot 12K and downsample to 4K your image will look better,” says Hangman director James Tonkin. “If you shoot 4K for 4K you have nowhere else to go. We don’t want a resolution or format war. It’s about better pixels and smarter use of pixels.”
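One way to see why downsampling helps is noise averaging. The sketch below is a simplified model, not a description of any camera or grading pipeline: it assumes a flat grey frame with independent Gaussian noise and uses a plain 3 x 3 box average (real scalers typically use better filters). The 3:1 factor assumes a 12,288 x 6,480 12K frame reduced to 4,096 x 2,160 DCI 4K. Averaging nine independent samples should cut the noise standard deviation by roughly the square root of 9, i.e. a factor of 3.

```python
import random
import statistics


def box_downsample(img, factor):
    """Average each factor x factor block of a 2D list into one output pixel."""
    h, w = len(img), len(img[0])
    out = []
    for by in range(0, h - h % factor, factor):
        row = []
        for bx in range(0, w - w % factor, factor):
            block = [img[y][x] for y in range(by, by + factor)
                     for x in range(bx, bx + factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def noisy_flat_frame(h, w, level=0.5, sigma=0.05, seed=1):
    """A flat grey frame with independent Gaussian noise (a modelling assumption)."""
    rng = random.Random(seed)
    return [[level + rng.gauss(0, sigma) for _ in range(w)] for _ in range(h)]


src = noisy_flat_frame(300, 300)   # stand-in for a small crop of a 12K frame
dst = box_downsample(src, 3)       # 3:1 linear reduction, as from 12K to 4K
noise_src = statistics.pstdev([v for row in src for v in row])
noise_dst = statistics.pstdev([v for row in dst for v in row])
print(f"noise before: {noise_src:.4f}, after: {noise_dst:.4f}, "
      f"ratio: {noise_src / noise_dst:.2f}")
```

Real footage is not flat and its noise is not perfectly independent, so the gain in practice will differ, but the direction matches Tonkin's point: oversampled pixels make better pixels.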

Futureproof the Archive

Film negative remains the gold standard for archiving. Studios are mining their back catalogue to resurrect classics shot decades ago – like The Wizard of Oz (1939) restored by Burbank’s Warner Bros. Motion Picture Imaging – for 4K HDR reissues.

But footage shot on earlier digital formats has aged badly.

“Music videos I shot on MiniDV look appalling now and you can’t make them any better than what they were shot on,” says Tonkin. “I’ve just been grading a project captured at 4K Log and I know the client wants to push the image more but I can’t pull anything more from it. The technology is not allowing you to express the full creative intent.

“But we’re getting to a point where what we shoot now will last for another 15+ years, maybe longer. If you want any longevity then shoot, finish and master at the highest format possible at the time otherwise it will add more cost down the line when you need to revisit it.”

Content producers have long seen creative and business benefits in acquiring at the highest possible image format, regardless of the actual current deliverable. 8K is now being promoted to studios as the best way to futureproof content.

“Now for the first time we are able to capture at a resolution that is considerably higher than 35mm,” said Des Carey, Samsung’s head of cinematic innovation, who rated 35mm at a 5.6K equivalent. “Remastering from 35mm is time-consuming, costly and can add artefacts and scratches. With 8K data it is easier to get back to what you initially shot. With 8K data we also have the option to conform at 2K, 4K or 8K and, as AI upscaling becomes more robust, we will see Hollywood blockbusters shooting at higher resolutions.”

Games Engine Rendering

Using games engines, the same content can be rendered at different resolutions depending on the context. Both the source video content and the graphics can be represented in a 3D space, and final quality pixels – in 2K, 4K or 8K – can be rendered in real-time.

“UE generates the video feed on demand at a prescribed resolution and frame rate,” explains Petit. “Multiple systems running Unreal can be combined with Epic’s nDisplay technology to render to screens or LED walls of any shape and/or resolution, planar or not.

“In broadcast, the same 3D content generated in Unreal can be used for HD or 4K production,” he says. “For live events, a graphics package created for a concert, including multiple live camera feeds for a stage backdrop, can be rendered to different display configurations depending on the venue, whether a 4K video wall for a small venue or a more complex setup with projectors for a stadium. It is the same content that is rendered to a number of video feeds; each can be a different resolution.

“That same content can also be experienced in VR for previsualization, camera blocking or location scouting purposes.”
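The principle Petit describes, one scene description sampled into feeds of whatever size each display needs, can be illustrated with a toy rasteriser. This is a hypothetical Python sketch, not how Unreal Engine or nDisplay work internally: the scene is a single disc whose position and size are stored as fractions of the frame rather than in pixels, and `render` samples it at pixel centres for each output. The feed names and sizes are small illustrative stand-ins chosen so the example runs quickly.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Disc:
    cx: float  # centre x as a fraction of frame width (0..1)
    cy: float  # centre y as a fraction of frame height (0..1)
    r: float   # radius as a fraction of frame height


def render(scene, width, height):
    """Rasterise a resolution-independent scene by sampling each pixel centre."""
    frame = [[0] * width for _ in range(height)]
    for d in scene:
        cx, cy, r = d.cx * width, d.cy * height, d.r * height  # to pixel units
        for py in range(height):
            dy = py + 0.5 - cy
            for px in range(width):
                dx = px + 0.5 - cx
                if dx * dx + dy * dy <= r * r:
                    frame[py][px] = 1
    return frame


def coverage(frame):
    """Fraction of pixels lit in a rendered frame."""
    return sum(map(sum, frame)) / (len(frame) * len(frame[0]))


def analytic_coverage(disc, width, height):
    """Exact covered fraction, valid when the disc lies fully inside the frame."""
    return math.pi * (disc.r * height) ** 2 / (width * height)


scene = [Disc(0.5, 0.5, 0.25)]
feeds = {"small venue wall": (320, 180),
         "large venue wall": (640, 360),
         "wide projector strip": (960, 180)}
for name, (w, h) in feeds.items():
    lit = coverage(render(scene, w, h))
    print(f"{name} {w}x{h}: {lit:.4f} (exact {analytic_coverage(scene[0], w, h):.4f})")
```

Because the disc is defined relative to frame height, it stays round and covers the same share of both 16:9 feeds; only the sampling density changes from one output to the next.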

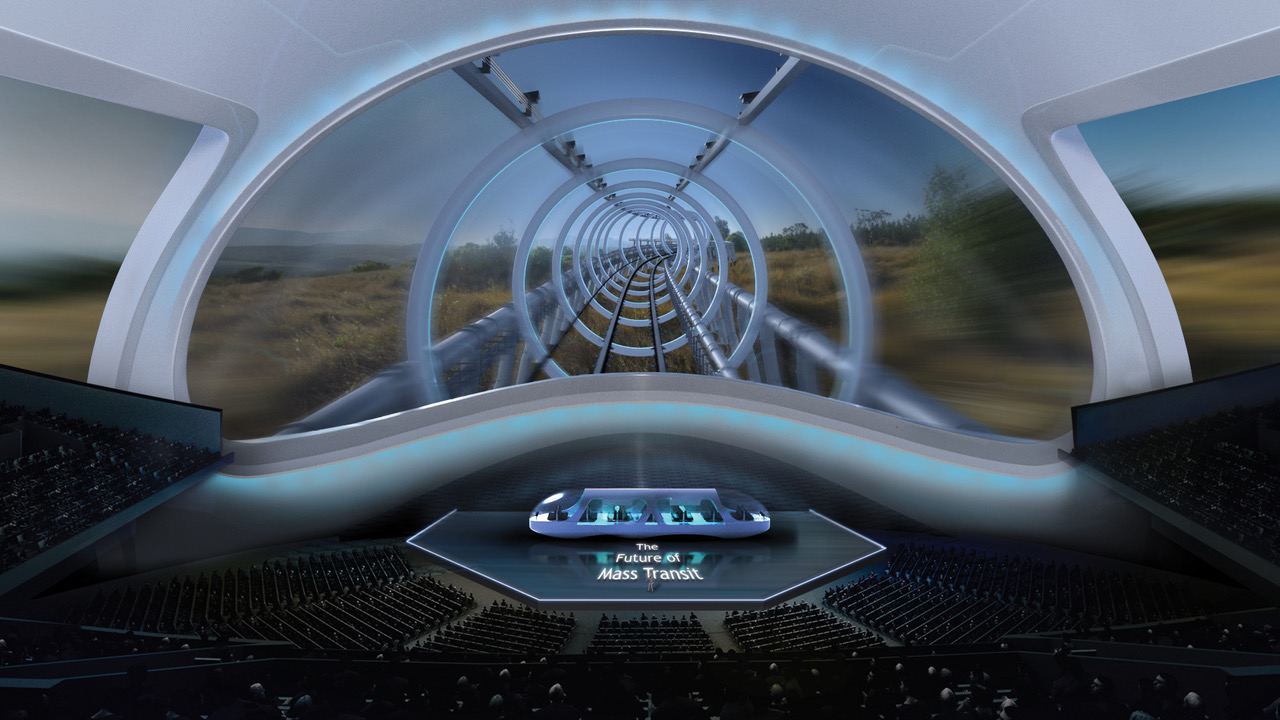

Mega High-Resolution Projects

With 4K UHD and HDR on the technology roadmaps of leading media organisations, advances in display technology are also driving the need for greater fidelity for other applications. A giant 19.3m x 5.4m Crystal LED system with a display resolution of 16K x 4K has been installed at the Shiseido Global Innovation Center in Yokohama, Japan.
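Dividing the wall’s physical size by its pixel count gives the pixel pitch. The sketch below assumes “16K x 4K” means 15,360 x 4,320 pixels, i.e. two 8K UHD rasters side by side; that exact raster is an assumption for illustration, not a published figure. Under that assumption the pitch comes out at roughly 1.25 mm in each direction and the wall carries about 66 megapixels, twice an 8K UHD frame.

```python
def led_wall_stats(width_m, height_m, px_w, px_h):
    """Return (horizontal pitch mm, vertical pitch mm, megapixels) for an LED wall."""
    return width_m * 1000 / px_w, height_m * 1000 / px_h, px_w * px_h / 1e6


# Assumption: "16K x 4K" taken as 15,360 x 4,320 pixels.
pitch_h, pitch_v, mp = led_wall_stats(19.3, 5.4, 15360, 4320)
print(f"pitch ~{pitch_h:.2f} mm x {pitch_v:.2f} mm, {mp:.1f} MP")
```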

High-resolution, fine-pixel-pitch LED displays are also being used within the filmmaking process to display backdrop images. Disney/ILM’s stage for The Mandalorian, for example, was a 270-degree immersive display, 21 ft tall and 75 ft in diameter, with a roof made of screens driving many millions of pixels.

“Mega high-resolution projects are being worked on for concert and event venues that need to wow people to justify the ticket prices,” says Jan Weigner, Cinegy co-founder and CTO. “With 8K set to become the home cinema norm, that increases the stakes. 16K will come for sure, but 16K for VR is not a whole lot. For many AI-driven pan-and-scan virtual camera applications, 16K would be a start.”

“The moment resolution ceases to matter and we can cover 12K or 16K resolution per eye for VR (which requires 36K or 48K respectively), we are getting somewhere. Give me the pixels, the more the better, and the applications will follow. 50-megapixel cameras exist, albeit in security, not broadcast.”

IP Bottleneck

The concept of format agnosticism emerged eight years ago as broadcast vendors built more IT components into camera gear. The idea was that the industry’s move to IP packets for distributing video meant resolution would no longer matter: IP infrastructure would be endlessly upgradeable and eliminate the need to rip and replace hardware.

Given that many 4K workflows are still routing Quad-Link HD-SDI signals and that 4K UHD over 10GigE links requires compression, progress could be considered slow.
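The arithmetic behind the 10GigE point is short. This sketch counts the active picture only (as SMPTE ST 2110-20 carries it, without SDI blanking) and assumes 10-bit 4:2:2 sampling; it ignores RTP/UDP/IP packet overhead, which would only make the fit worse. The function name is illustrative.

```python
def active_video_gbps(width, height, fps, bits_per_sample=10, samples_per_pixel=2.0):
    """Uncompressed active-picture bitrate in Gb/s.

    samples_per_pixel: 3.0 for 4:4:4, 2.0 for 4:2:2, 1.5 for 4:2:0.
    """
    return width * height * samples_per_pixel * bits_per_sample * fps / 1e9


LINK_GBPS = 10.0  # nominal 10GigE line rate; usable payload is lower still
for fps in (50, 60):
    rate = active_video_gbps(3840, 2160, fps)
    print(f"2160p{fps} 10-bit 4:2:2: {rate:.2f} Gb/s "
          f"({rate / LINK_GBPS:.0%} of a 10GigE link)")
```

At 2160p60 the active picture alone is about 9.95 Gb/s, essentially the whole nominal line rate before any packet overhead, so uncompressed 60p UHD does not fit on a single 10GigE link; even 50p, at about 8.29 Gb/s, leaves little headroom.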

“IP computing technology has advanced in terms of bandwidth, latency and processing capacity run in the cloud; however, ever-increasing picture quality brings an enormous increase in the volume of data,” says Sykes. “An uncompressed 8K video signal at double the usual frame rate, for example, will use up 32 times more bandwidth than an uncompressed HD signal.

“This bandwidth needs to be accommodated in transport as well as processing, and for live production in particular, this needs to be done properly to avoid impacting latency – that’s a really complex task!”
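Sykes’s 32x figure follows directly from pixel rate: 8K has four times HD’s width and four times its height (16 times the pixels), and doubling the frame rate doubles that again. A minimal sketch, assuming 10-bit 4:2:2 (20 bits per pixel), a 50 fps HD baseline and active picture only:

```python
def uncompressed_gbps(width, height, fps, bits_per_pixel=20):
    """Active-picture bitrate in Gb/s; 20 bits/pixel corresponds to 10-bit 4:2:2."""
    return width * height * bits_per_pixel * fps / 1e9


hd = uncompressed_gbps(1920, 1080, 50)          # HD 1080p50
uhd8k_hfr = uncompressed_gbps(7680, 4320, 100)  # 8K at double the frame rate
print(f"HD 1080p50:  {hd:.2f} Gb/s")
print(f"8K 4320p100: {uhd8k_hfr:.2f} Gb/s")
print(f"ratio: {uhd8k_hfr / hd:.0f}x")
```

This gives about 2.07 Gb/s for HD and about 66.36 Gb/s for 8K at 100 fps. The ratio does not depend on the bit depth or chroma format chosen, as long as both signals use the same one.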

But the bandwidth challenge doesn’t stop there. As well as using higher resolutions, productions (especially in sport) are now using more camera signals. Furthermore, production workflows in facilities often need to accommodate multiple video formats at the same time (e.g. HD, HD+HDR, 4K+HDR), which raises bandwidth requirements even further.

“IP WAN links with higher capacity (100Gbps, 200Gbps) help, but video signals will very rapidly fill those links – just like road traffic will always increase to fill extra lanes on highways,” Sykes says. “The WAN transport is just the start of the problem: capacity within the production facilities is likely to be an even greater challenge.”
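To put Sykes’s highway analogy into numbers, this sketch counts how many 8K 50p streams fit on 100 Gb/s and 200 Gb/s links. The 80% usable-headroom figure and the 10:1 compression ratio are illustrative assumptions, not figures from the article, and the bitrate counts active picture only at 10-bit 4:2:2.

```python
import math


def streams_per_link(link_gbps, stream_gbps, headroom=0.8):
    """How many streams fit if only `headroom` of the link is used for video."""
    return math.floor(link_gbps * headroom / stream_gbps)


# 8K 50p, 10-bit 4:2:2 (20 bits/pixel), active picture only: about 33.18 Gb/s
uhd8k_p50 = 7680 * 4320 * 20 * 50 / 1e9

for link in (100, 200):
    raw = streams_per_link(link, uhd8k_p50)
    compressed = streams_per_link(link, uhd8k_p50 / 10)  # assumed 10:1 compression
    print(f"{link}G link: {raw} uncompressed 8K50 streams, {compressed} at 10:1")
```

Under these assumptions a 100G link carries only two uncompressed 8K50 streams and a 200G link four; even at 10:1 the totals (24 and 48) can be consumed quickly by a multi-camera production running several formats at once.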

In short, planning for scalability in IP media networks is essential; otherwise, higher production values cannot be accommodated, even with low-latency encoding options such as JPEG 2000 ULL or JPEG XS.

“The future of content creation and delivery will continue to be about competition for quality and excellence around a set of common industry standards,” Sykes says. “There is a need, however, to balance technical performance against strict commercial requirements, especially in today’s much-changed global media landscape.”