Latency is the big bugbear of live production and is certainly hampering migration to the cloud, but perhaps the industry is looking at the problem from the wrong end of the lens. What if latency were embraced and you could perform realtime control in the cloud?

The result would transform live sport into the genuinely immersive, interactive experience that producers believe it can be. What’s more, the bare bones of the technology to achieve it are already here.


First, it requires the industry to shed its allegiance to uncompressed media and its fetish for zero latency.

“Once we stop looking at moving current production technologies and workflows to the cloud, and instead ask how we reinvent live production, it totally transforms how we cover events,” argues Peter Wharton, Director, Corporate Strategy, TAG Video Systems.

“If we continue to bring the excess baggage of latency to the cloud, we bring with us the same workflows, which do not leverage what the cloud can do.”

NAB Amplify lays out the case for reinventing linear media workflows in the cloud:

Start from Scratch

Efforts to migrate traditional production workflows to the cloud rely on fundamentally the same technology, familiar feature sets and control surfaces that have been unchanged for half a century.

Mindsets have been replicated too. Where functions were segregated in an OB truck (between camera shading and switching, for example), the same separation is being repeated in the move to IP. This approach is coming up short in the next step to the cloud.

“We’ve had to adapt from ST 2110 to something it wasn’t designed for,” says Wharton. “2110 is not for the cloud. 2110 exists because we want to maintain the latency of the SDI world at the expense of cost and bandwidth.”

Solutions that abandon uncompressed video but retain ST 2110’s precision timing are being advocated. Top of the pile is JPEG XS, which offers sub-frame latency on the production side while reducing bandwidth, making live over IP more economical for long-haul transport.


Another solution with potential is the Cloud Digital Interface (CDI), developed by AWS and being proposed to standards bodies. CDI allows uncompressed video to flow through the AWS Cloud with reliable delivery at the other end and minimal added latency (as low as 8 milliseconds).

The issue, as far as Wharton sees it, is that these solutions continue to translate traditional SDI workflows.

“Live production in the cloud doesn’t work unless you build it end to end,” he says. “That’s important because as OTT becomes the dominant force for distribution the whole media ecosystem needs to be in the cloud. You need to co-locate production and distribution systems.

“The problem is that we’re always battling latency. For that reason, we can’t move the cameras and the camera-ops to the cloud.”

Volumetric Camera, Virtualized Game

But what if you could? Could the playing field be virtualized just like every other part of the live production machine?

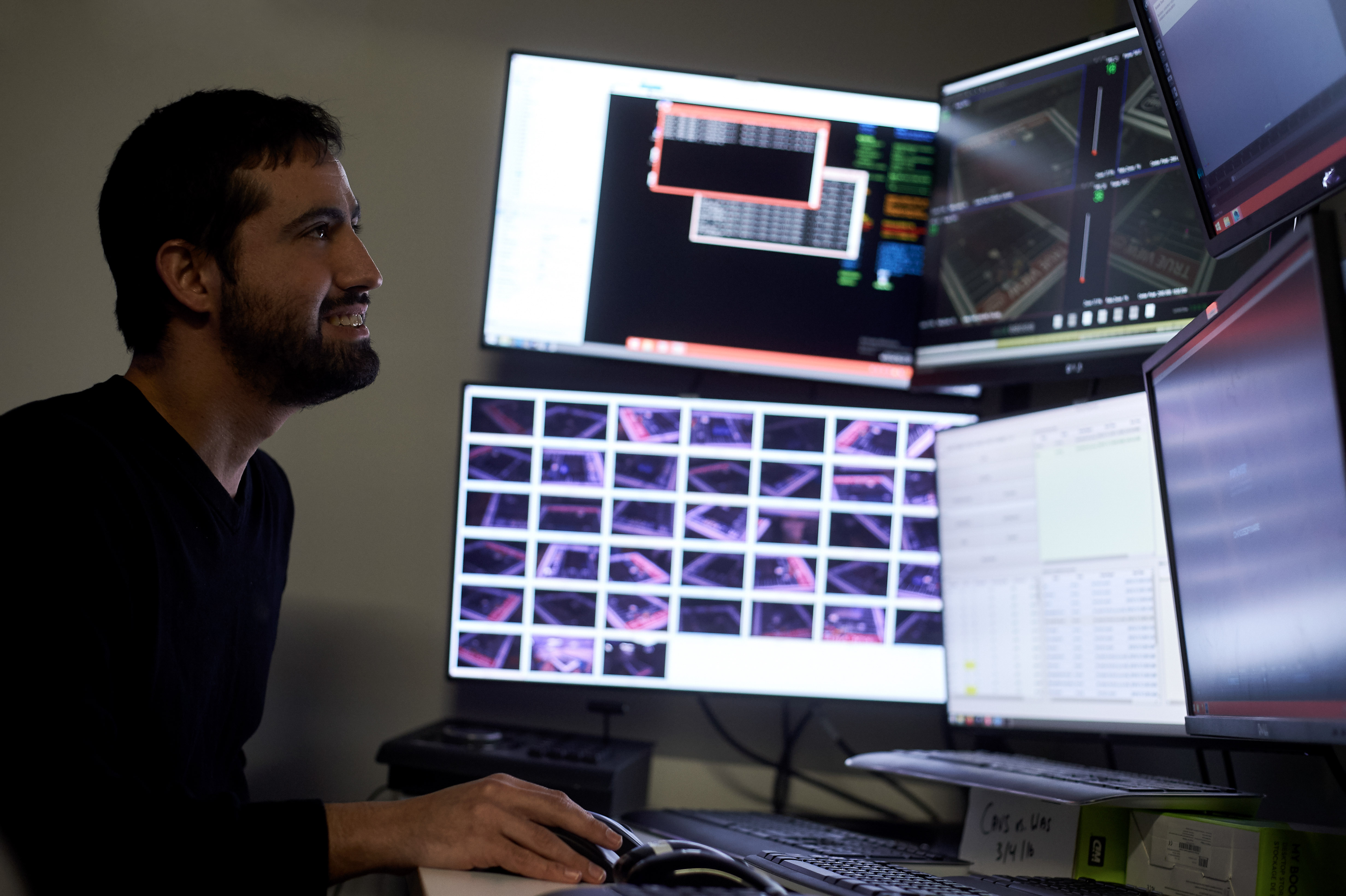

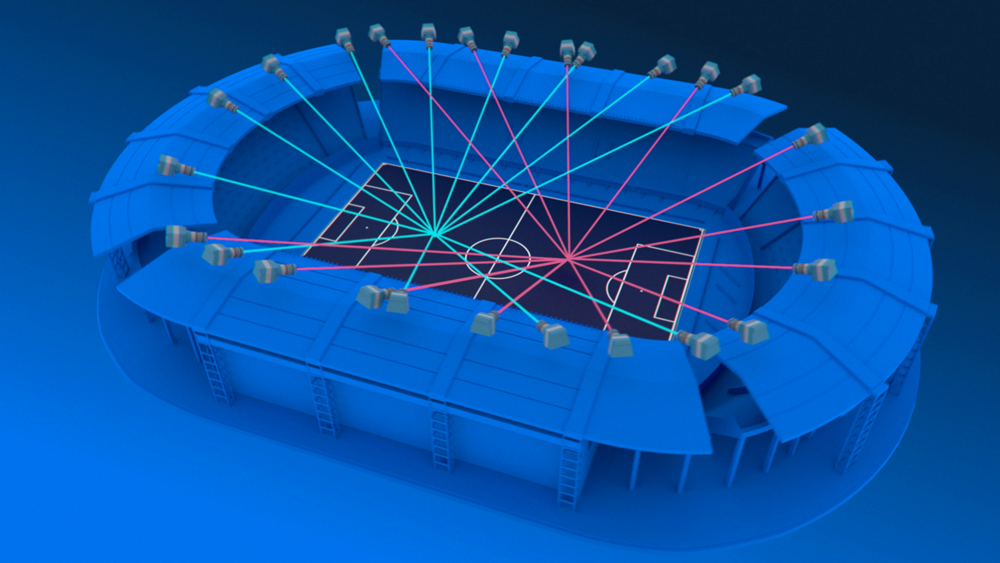

Wharton’s answer is volumetric camera arrays. In this model, stadia are ringed by dozens of cameras on the mezzanine level, with all feeds home-run to the cloud. There the feeds would be processed into a point cloud from which the director can slice out any angle or single view they want.
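To make “slice out any angle” concrete, here is a minimal Python sketch of the final step only: rendering one virtual camera view from a point cloud that is assumed to already exist as arrays of positions (in metres) and RGB colours. The helpers `look_at` and `render_view`, the pinhole camera model, the image size and the focal length are all illustrative assumptions, not a description of any vendor’s engine; a production renderer would splat or mesh the points rather than drawing one pixel per point.

```python
import numpy as np


def look_at(eye, target, up=(0.0, 0.0, 1.0)):
    """World-to-camera rotation R and translation t for a virtual camera at `eye`
    looking at `target` (z is up in world space; do not look straight up or down)."""
    eye = np.asarray(eye, dtype=float)
    forward = np.asarray(target, dtype=float) - eye
    forward /= np.linalg.norm(forward)
    right = np.cross(forward, up)
    right /= np.linalg.norm(right)
    down = np.cross(forward, right)
    # Camera axes as rows: x = right, y = down, z = forward (image rows grow downward)
    R = np.stack([right, down, forward])
    return R, -R @ eye


def render_view(points, colors, eye, target, width=1920, height=1080, focal_px=1400.0):
    """Project an (N, 3) point cloud with (N, 3) uint8 colours through a pinhole camera."""
    R, t = look_at(eye, target)
    cam = points @ R.T + t                      # world -> camera coordinates
    keep = cam[:, 2] > 0.1                      # near plane: drop points behind the camera
    cam, colors = cam[keep], colors[keep]
    u = np.floor(focal_px * cam[:, 0] / cam[:, 2] + width / 2).astype(int)
    v = np.floor(focal_px * cam[:, 1] / cam[:, 2] + height / 2).astype(int)
    on_screen = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    u, v, z, colors = u[on_screen], v[on_screen], cam[on_screen, 2], colors[on_screen]
    # Depth test: for each pixel keep only the point nearest the camera
    pix = v * width + u
    order = np.lexsort((z, pix))                # sort by pixel, then by depth
    _, first = np.unique(pix[order], return_index=True)
    nearest = order[first]
    image = np.zeros((height * width, 3), dtype=np.uint8)
    image[pix[nearest]] = colors[nearest]
    return image.reshape(height, width, 3)
```

Each operator would simply call `render_view` with a different `eye` and `target`; the point cloud itself is shared, which is why adding camera positions costs compute rather than hardware at the venue.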

The volumetric camera operators would sit in a central production control room, not at the venue. Far fewer of them are needed, perhaps four or five as opposed to 32 for a standard English Premier League match, while an unlimited number of camera positions becomes possible.

The multi-camera arrays could be 4K UHD, perhaps paired with lidar, a time-of-flight sensing technology that measures the distance between the sensor and an object or player on the field.
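The time-of-flight principle behind lidar comes down to one line of arithmetic: a light pulse travels to the target and back, so the distance is half the round-trip time multiplied by the speed of light. The function names below are illustrative; real sensors handle pulse detection, multiple returns and calibration internally.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to a target from a lidar pulse's round-trip time.
    The light travels out and back, so the one-way distance is half the path."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_s(distance_m: float) -> float:
    """Inverse: how long the echo takes to come back from a target at `distance_m`."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


# A player 60 m from the sensor returns an echo after roughly 400 nanoseconds
print(f"{round_trip_s(60.0) * 1e9:.0f} ns")
```

Timing resolution is what matters here: a 1 ns error in the measured round trip shifts the estimated distance by about 15 cm.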

The shots themselves are synthesized in production, much like the observer camera in eSports. Operators would control a virtual camera to shoot the game.

What’s more, production can use the four to five seconds it takes to process the raw feeds to see the event slightly ahead of time and anticipate shots. Virtual jib cams, sky cams and POV cams could all be driven by AI, with camera moves pre-programmed.
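The “see the event ahead of time” idea can be sketched as a lookahead buffer: anything spotted in the raw feeds but not yet reached by the point-cloud render is, from the virtual camera’s point of view, still in the future. This is a minimal sketch under stated assumptions: the class names are hypothetical, the 4.5 s delay in the usage line is simply the midpoint of the four-to-five-second figure above, and the detector that would label events (AI or human spotter) is not shown.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class RawEvent:
    t: float     # event time in seconds, taken from the raw camera feeds
    label: str   # e.g. "shot", tagged by a hypothetical detector or spotter


class LookaheadBuffer:
    """Events seen in the raw feeds but not yet reached by the point-cloud render.

    The render trails the live action by `processing_delay_s`, so anything in this
    buffer is still in the future as far as the virtual cameras are concerned."""

    def __init__(self, processing_delay_s: float):
        self.delay = processing_delay_s
        self.pending = deque()

    def ingest(self, event: RawEvent) -> None:
        self.pending.append(event)          # events are assumed to arrive in time order

    def upcoming(self, wall_clock_s: float):
        render_time = wall_clock_s - self.delay
        # Discard events the rendered output has already shown
        while self.pending and self.pending[0].t <= render_time:
            self.pending.popleft()
        # Pair each remaining event with how far ahead of the render it lies
        return [(e.label, e.t - render_time) for e in self.pending]


buf = LookaheadBuffer(processing_delay_s=4.5)
```

A camera-move planner would poll `upcoming()` each frame and start positioning a virtual jib or sky cam before the rendered action reaches the event.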

Zero Latency

Most importantly, camera control now operates at ‘zero latency’ relative to the virtual representation of the game in the cloud.

“The camera shot is directed at switcher time, not event time,” says Wharton. “Event-to-cloud latency is now irrelevant because it is the virtual point cloud we are shooting against. With cameras in the cloud, the only latency you’d have to manage, the only latency a director will worry about, is between the cloud and the operators [the virtual camera].”
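Wharton’s distinction between event time and switcher time can be expressed as two separate sums. In this sketch (field names and the simplified model are assumptions; encode and render time are ignored), contribution and processing delay only determine how far the virtual game trails the real one, while the responsiveness an operator feels depends solely on the two legs between cloud and operator.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    event_to_cloud_s: float     # contribution: venue cameras to cloud ingest
    point_cloud_s: float        # volumetric processing in the cloud
    cloud_to_operator_s: float  # rendered preview travelling to the operator
    operator_to_cloud_s: float  # control input travelling back to the cloud

    def virtual_game_delay_s(self) -> float:
        """How far the cloud's virtual game trails the real one."""
        return self.event_to_cloud_s + self.point_cloud_s

    def control_loop_s(self) -> float:
        """Time from moving a control to seeing the result. Only the
        cloud-operator legs count, because the shot is framed against the
        point cloud, not against the live event."""
        return self.cloud_to_operator_s + self.operator_to_cloud_s
```

With hypothetical values, raising `event_to_cloud_s` from 0.5 s to 2 s delays the virtual game by an extra 1.5 s but leaves `control_loop_s()` unchanged, which is the sense in which event-to-cloud latency becomes irrelevant to the operator.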

Pressed on this, Wharton says no distribution model, whether cable, satellite or OTT, is going to beat someone in a stadium using a cellphone to place bets on the game in front of them.

“You’re looking at 5-10 seconds of latency minimum in almost every distribution model compared to the half second on your phone. The delay we’re talking about [with volumetric production] is two seconds on distribution. If it is any more than that you throw more compute power at it.”

Moves You Can Only Imagine

The volumetric model trades away the traditional preoccupation with latency to create far more immersive coverage of a game.

“By recreating the entire playing field as a point cloud using volumetric cameras, I could position, pan, control and move an eSports-style observer camera any way I want. If you think about the millennial audience, who are used to the engaging involvement of an eSports game, then the traditional linear sports presentation feels two-dimensional compared to the far more immersive perspective of the game that could be created.

“From the volumetric camera array operators can interpolate any camera position you want. I can start with the camera behind a player as they kick the ball and follow the ball’s trajectory before getting right behind the goalkeeper as they save it. You can do camera moves you’d never attempt now.”

For instance, the camera could move from one side of the stadium to the other without a jump cut, which would otherwise confuse viewers.
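The kicker-to-keeper move Wharton describes amounts to interpolating a smooth path through a few virtual camera keyframes. The sketch below uses a uniform Catmull-Rom spline, one common choice among several; the technique, the function names and the coordinates are assumptions for illustration. Because every sample lies on one continuous curve, the virtual camera can travel across the ground with no cut.

```python
import numpy as np


def catmull_rom(p0, p1, p2, p3, s):
    """Uniform Catmull-Rom point between p1 (s=0) and p2 (s=1)."""
    return 0.5 * (
        2 * p1
        + (p2 - p0) * s
        + (2 * p0 - 5 * p1 + 4 * p2 - p3) * s**2
        + (3 * p1 - p0 - 3 * p2 + p3) * s**3
    )


def camera_path(keyframes, samples_per_segment=25):
    """Sample a smooth path that passes through every keyframe position."""
    pts = np.asarray(keyframes, dtype=float)
    # Repeat the end keyframes so the curve starts and ends exactly on them
    padded = np.vstack([pts[0], pts, pts[-1]])
    path = []
    for i in range(1, len(pts)):
        for s in np.linspace(0.0, 1.0, samples_per_segment, endpoint=False):
            path.append(catmull_rom(padded[i - 1], padded[i], padded[i + 1], padded[i + 2], s))
    path.append(pts[-1])
    return np.array(path)


# Hypothetical positions in metres (x along the pitch, y across it, z height):
# behind the kicker, alongside the ball in flight, then behind the goalkeeper
keyframes = [(20.0, 10.0, 1.8), (35.0, 4.0, 4.0), (54.0, 0.0, 1.8)]
path = camera_path(keyframes)
```

Each sample in `path` would become the `eye` of the virtual camera for one frame; at 25 samples per segment the camera moves well under a metre between consecutive samples.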

What’s the Cost?

It all sounds far-fetched, since it would still need massive amounts of compute power. Wharton admits he is no cloud computing expert but says he has done the math.

He estimates that approximately 8000 cores are needed to process the feeds from 32 UHD cameras into a point cloud with enough resolution to zoom in without losing detail.

That’s tens of millions of dollars of servers – if you were to buy them. Renting is another matter.

An entire 96-core server can be rented from Amazon for $7.80 an hour (roughly $0.081 per core per hour). Plus, it’s all OPEX, not CAPEX.
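The rental arithmetic can be checked from the figures given here. This sketch assumes whole 96-core servers at the quoted $7.80 hourly list price and ignores storage, egress, GPU and networking charges. Under those assumptions 8000 cores means 250 cores per camera and 84 servers, or about $655 an hour, in line with Wharton’s own figure of roughly $640.

```python
import math

SERVER_CORES = 96
SERVER_PRICE_PER_HOUR = 7.80   # quoted list price, US dollars


def rental_cost_per_hour(total_cores: int) -> tuple[int, float]:
    """Whole servers needed for `total_cores`, and their combined hourly rent."""
    servers = math.ceil(total_cores / SERVER_CORES)
    return servers, servers * SERVER_PRICE_PER_HOUR


price_per_core = SERVER_PRICE_PER_HOUR / SERVER_CORES   # about $0.081 per core-hour
cores_per_camera = 8000 / 32                              # 250 cores per UHD camera
servers, hourly = rental_cost_per_hour(8000)              # 84 servers, about $655 an hour
```

Rounding up to whole servers is why the result sits slightly above 8000 × $0.08; pricing per core instead gives the $640 figure.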

“Based on the most expensive compute with the most expensive memory and GPU instances, I can rent 8000 cores for $640 an hour. That’s throwaway money in live production. It’s less than the travel and entertainment costs for a single camera person.

“While we don’t care about compression latency as long as the images can be synchronized, compression artifacts could stress the computation of the point cloud in the same way noise impacts compression. So fairly low compression ratios with very high image fidelity are probably needed. I think this means JPEG XS at somewhere between 4:1 and 8:1 compression today.”

All this requires stadia to be equipped with 100 Gbps Ethernet/fibre. Taking Wharton’s model again: 32 UHD cameras × 12 Gbps native data rate = 384 Gbps raw data rate. 5:1 compression reduces that to about 77 Gbps, hence the need for 100 Gbps links.
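The same calculation can be run across the 4:1 to 8:1 range Wharton suggests for JPEG XS. At 4:1 the array needs 96 Gbps, which leaves little headroom on a 100 Gbps link once packet overhead is added; at 8:1 it drops to 48 Gbps. The constants below are the article’s figures; packet overhead is not modelled.

```python
CAMERAS = 32
NATIVE_GBPS = 12.0     # per-camera UHD rate used in Wharton's model
LINK_GBPS = 100.0


def compressed_gbps(ratio: float) -> float:
    """Aggregate contribution bandwidth for the whole array at a given compression ratio."""
    return CAMERAS * NATIVE_GBPS / ratio


for ratio in (4, 5, 8):
    total = compressed_gbps(ratio)
    print(f"{ratio}:1 -> {total:.1f} Gbps, {total / LINK_GBPS:.0%} of a 100 Gbps link")
```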

“Most professional stadiums in the US today already have 100 Gbps Internet and dark fibre connections to the outside world,” he says. “There’s no cost for ingest into the cloud, so this is not a factor in the economic analysis.”

He also points out that $640 is list price. “We’ve had this idea for years and wondered how it could be done. Now the question for the industry is who is going to develop the point cloud compute engine and software?”

Intel and Canon

The scenario extends to giving fans at home the ability to run a personal observer camera on their gaming consoles “for pennies an hour because the compute costs are so low for each camera output [estimated at 1-2 cores per stream].”

Wharton adds, “You could have a 360-degree game in your own headset and be walking around the field of play, live.”
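The “pennies an hour” claim follows from the same rental price. This sketch assumes the 1-2 cores per stream estimate and a per-core price derived from the 96-core, $7.80-an-hour server; it deliberately leaves out the cost of encoding and delivering each personalized stream to the viewer.

```python
PRICE_PER_CORE_HOUR = 7.80 / 96   # derived from the 96-core, $7.80/hour server price


def viewer_cost_per_hour(cores_per_stream: float) -> float:
    """Rental cost of one personal observer-camera stream for an hour."""
    return cores_per_stream * PRICE_PER_CORE_HOUR


low, high = viewer_cost_per_hour(1), viewer_cost_per_hour(2)   # roughly 8 to 16 cents
```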

Clues to how all this will look are already being demonstrated by Intel and Canon. Their respective True View and Free Viewpoint multi-camera arrays are installed at several NFL and EPL stadia, including Mercedes-Benz Stadium in Atlanta and Anfield, home of Liverpool FC.

The key difference is that these applications are not realtime.

“Intel use a fairly modest amount of compute power in racks on-prem. The recordings are used for replay not realtime because they have limited computer power. They’re basically throwing 300 cores not 8000 cores at it.”

The volumetric camera model might work for stadium sports, but it is harder (though not impossible) to see how an F1 circuit or golf course, with its larger scale and topographically challenging connectivity, would follow suit.

“Two years ago, live was the last remaining step in the holy grail for making cloud work for media ecosystems,” Wharton says. “Today we’re at the early stages of making that work and in two years it will be more than normal.

“US TV groups are already looking at how to migrate their live production control rooms to the cloud whereas until recently they’d be kicked out for even contemplating the idea.”