TL;DR

- VVC is one of several video compression technologies the industry is adopting as it moves on from HEVC.

- Beyond that, AI is expected to help optimize streaming bandwidth and enhance existing compression schemes.

- The video industry won’t abandon established, proven methods too early. Instead, there will be a mix of both approaches as AI research expands.

READ MORE: The State of Video Codecs: Evolution of Compression in a Video-First Economy (InterDigital)

AI is considered an essential new tool in the progress towards future video compression technologies, but the next few years will be dominated by the transition to existing standards, including AV1 and VVC, according to a new InterDigital and Futuresource Consulting report.

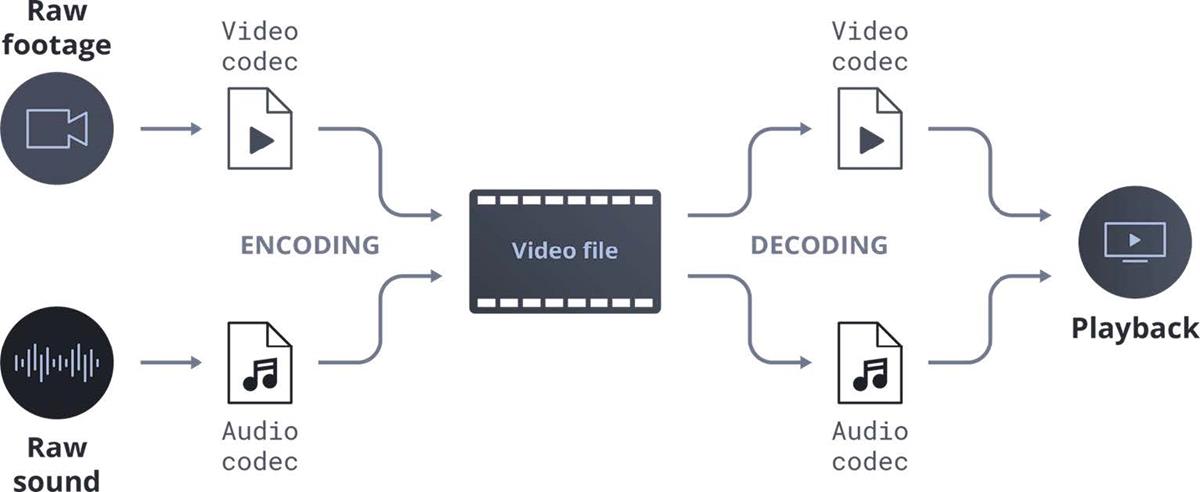

“The Evolution of Compression in a Video-First Economy” outlines the development path of video compression codecs, which have proven critical in reducing bandwidth and improving efficiency in the delivery of data-dense video services.

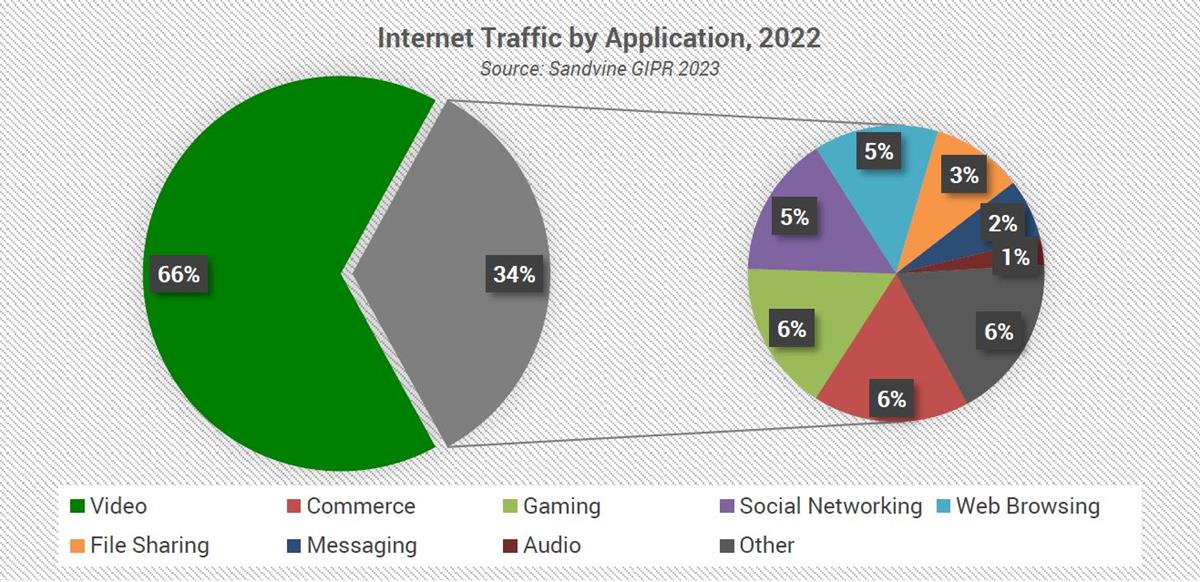

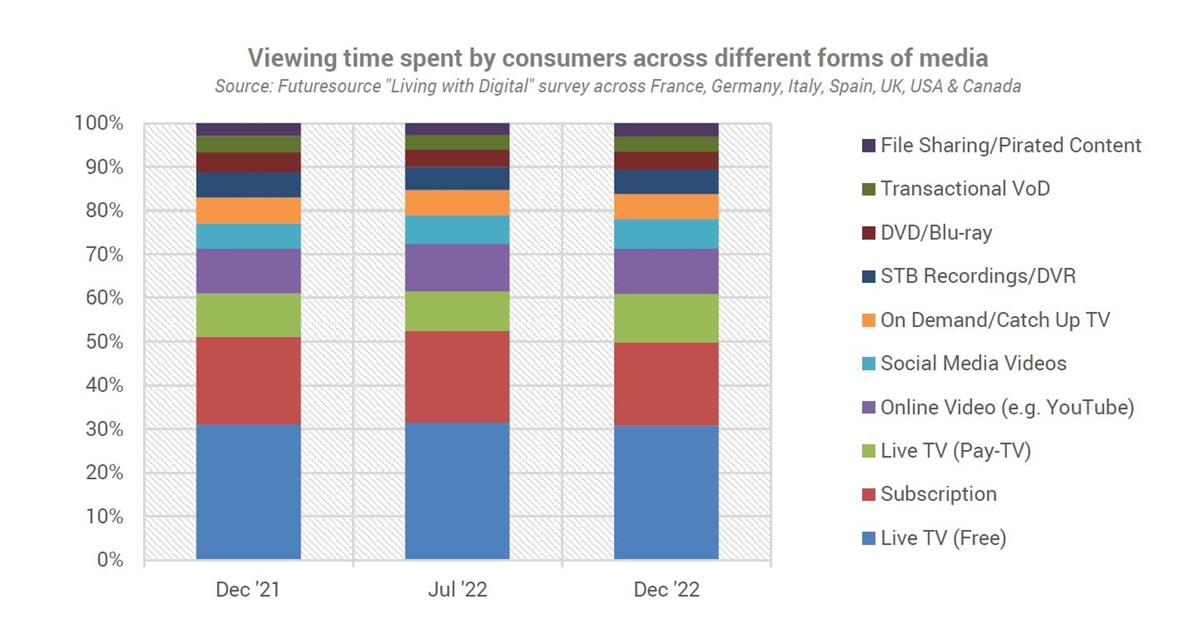

The report restates the case that video dominates internet traffic today, with more than 3.5 billion internet users streaming or downloading some form of video at least once a month. As applications for video expand, it argues, state-of-the-art codecs are needed not only to reduce storage and bandwidth but also to use energy more sustainably.

Spotlight on VVC

Unsurprisingly, given its own stake in the codec's development and licensing, InterDigital makes much of Versatile Video Coding (VVC/H.266) as the standout codec that will take over much of the work from HEVC, the current leading standard.

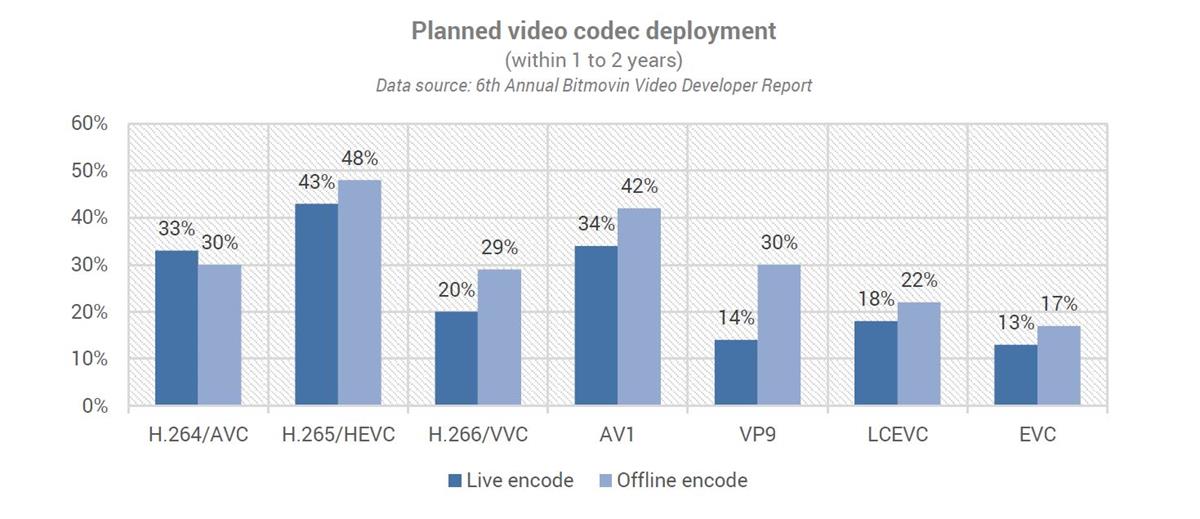

Based on research by codec specialist Bitmovin, H.264/AVC continues to be a popular choice for live and offline encoding, but InterDigital thinks it is likely to be overtaken by both H.265/HEVC and AV1 from the Alliance for Open Media (AOM) within the next two years.

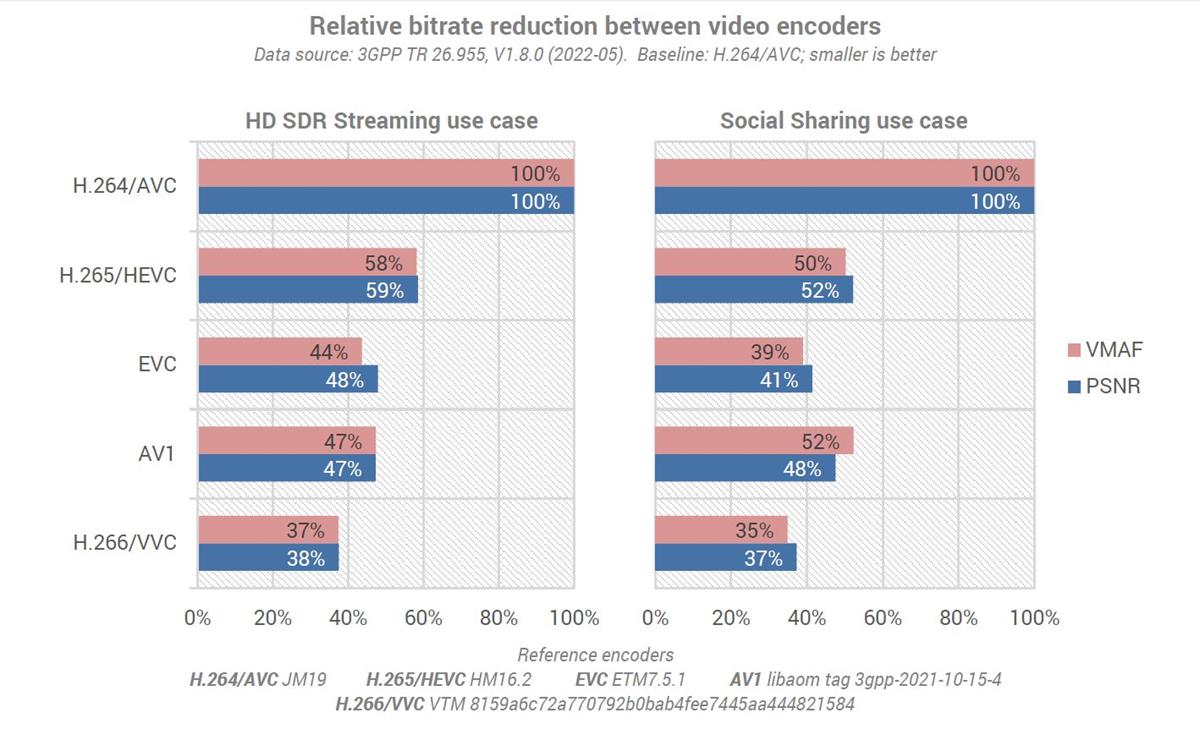

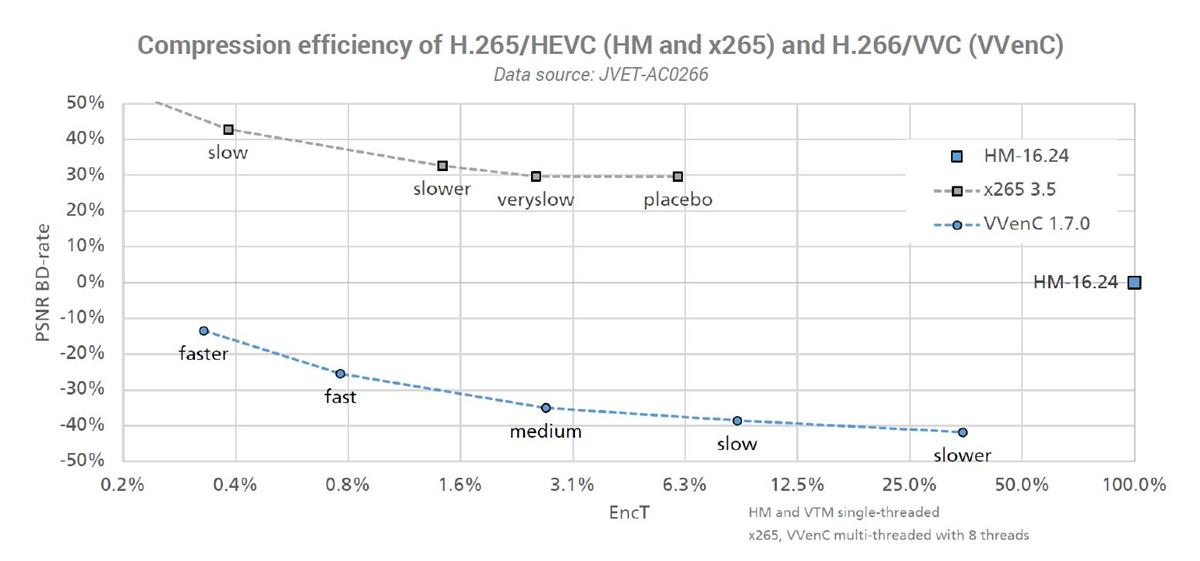

VVC (H.266) builds on H.265/HEVC and offers up to a 50% bit rate reduction compared with HEVC at the same perceived quality.
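To put the headline figure in concrete terms, the short Python sketch below converts a constant bit rate into data volume per hour and applies the best-case 50% saving. The 16 Mbps HEVC bit rate for a 4K stream is a hypothetical example, not a figure from the report, and real savings vary with content and encoder.

```python
def gb_per_hour(bitrate_mbps: float) -> float:
    """Data volume in gigabytes (10**9 bytes) for one hour at a constant bit rate."""
    seconds = 3600
    megabits = bitrate_mbps * seconds
    return megabits / 8 / 1000  # megabits -> megabytes -> gigabytes


def vvc_bitrate(hevc_bitrate_mbps: float, reduction: float = 0.5) -> float:
    """Bit rate VVC would need for the same quality, assuming the given fractional saving."""
    return hevc_bitrate_mbps * (1 - reduction)


hevc = 16.0  # hypothetical 4K HEVC bit rate in Mbps (illustrative only)
vvc = vvc_bitrate(hevc)  # best case: the full "up to 50%" saving
print(f"HEVC: {hevc} Mbps, {gb_per_hour(hevc):.1f} GB/hour")  # HEVC: 16.0 Mbps, 7.2 GB/hour
print(f"VVC:  {vvc} Mbps, {gb_per_hour(vvc):.1f} GB/hour")    # VVC:  8.0 Mbps, 3.6 GB/hour
```

Because "up to" describes the best case, a more conservative `reduction` value (for example 0.3) may be a fairer planning assumption.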

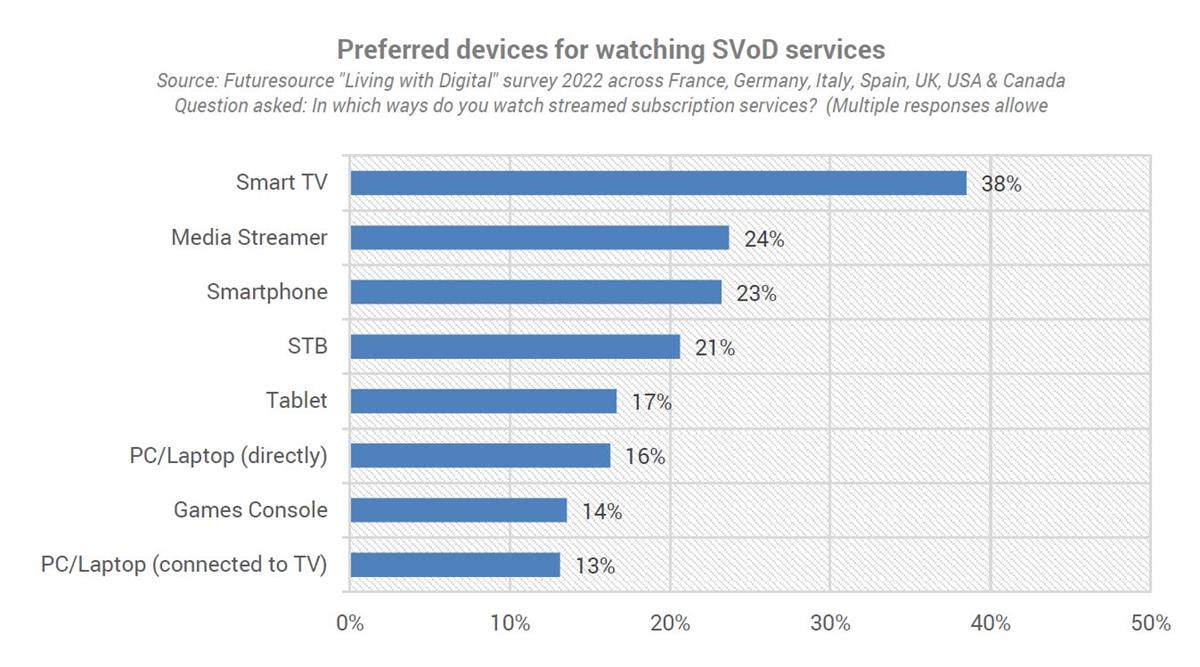

Alongside InterDigital, major semiconductor companies including Qualcomm, Broadcom and MediaTek are among the largest contributors of intellectual property to the VVC standard. They will integrate VVC into Android smartphone chipsets, helping to drive adoption on mobile. More widely, hardware decoders are under development to support VVC on TVs, STBs and PCs.

Chinese technology giants Alibaba and Tencent each have their own VVC codec implementations (S266v2 and TenCent266, respectively).

NHK and Spin Digital are also developing real-time software VVC decoders to support UHD live streaming and broadcast applications.

As a result, InterDigital believes VVC is likely to become the favored codec as UHD services proliferate, and expects it to be universally accepted and used from 2026 onward.

However, it says, “VVC may not replace H.264/AVC and H.265/HEVC entirely, but instead the industry is likely to advocate the coexistence of multiple codecs during the transition.”

Looking further ahead, though, codecs such as VVC, HEVC and AV1 are likely to be superseded by technologies based on neural networks.

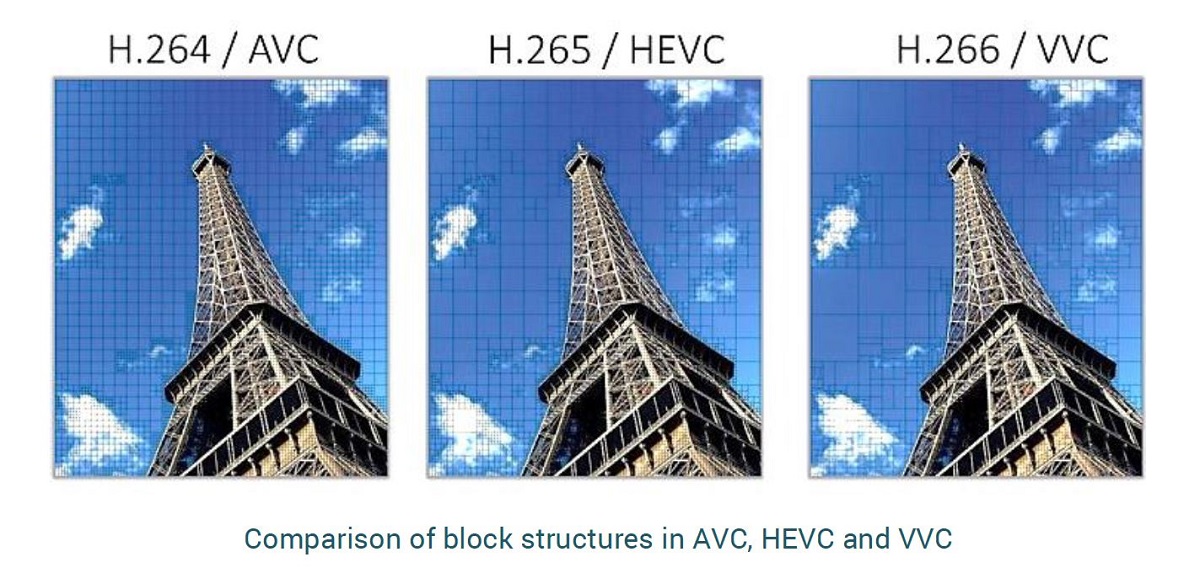

“The days of static, block-based codecs may be coming to an end,” InterDigital notes. “Traditional coding techniques use hard-coded algorithms and, although these are entirely appropriate for saving bandwidth, their advancement is still based on traditional heuristics.

“New coding methods, notably those exploiting the power of AI, are poised to supplant current wisdom within the next five years.”

Enter AI

Machine learning techniques are being researched by the major video standards organizations worldwide. Ad hoc Group 11 (AhG11) of JVET, the Joint Video Experts Team run by MPEG and ITU-T, is working on NNVC (Neural Network Video Coding) with the aim of creating an AI-based codec before the end of the decade.

The paper explains that there are three primary areas of focus: dynamic frame rate encoding, dynamic resolution encoding, and layering.

In dynamic frame rate encoding, the AI aims to encode video at the minimum frame rate needed to represent the content without sacrificing quality. For example, news broadcasts might be encoded at 30fps, whereas live sports benefit from 60fps.

“Using ML to train AI to identify the type of content, it is possible to significantly reduce the encode compute requirements, approaching a 30% reduction overall for content with limited movement between frames.”
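The report describes training ML models to classify content. As a much simpler stand-in for such a classifier, the Python sketch below uses the average pixel change between consecutive frames as a motion proxy and picks 30 or 60fps accordingly. The `motion_score` and `choose_frame_rate` helpers and the threshold of 4.0 are hypothetical illustrations, not InterDigital's method; the sketch assumes NumPy and grey-scale frames stacked as a `(frames, height, width)` array.

```python
import numpy as np


def motion_score(frames: np.ndarray) -> float:
    """Mean absolute luma difference between consecutive frames (0-255 scale)."""
    # Widen to int16 so that subtracting uint8 values cannot wrap around.
    diffs = np.abs(np.diff(frames.astype(np.int16), axis=0))
    return float(diffs.mean())


def choose_frame_rate(frames: np.ndarray, threshold: float = 4.0) -> int:
    """Pick 30 fps for low-motion content, 60 fps otherwise (threshold is hypothetical)."""
    return 30 if motion_score(frames) < threshold else 60


rng = np.random.default_rng(0)
# News-like stand-in: ten identical mid-grey frames, i.e. no motion at all.
static = np.full((10, 72, 128), 128, dtype=np.uint8)
# Sports-like stand-in: random noise, so every pixel changes from frame to frame.
busy = rng.integers(0, 256, size=(10, 72, 128)).astype(np.uint8)

print(choose_frame_rate(static), choose_frame_rate(busy))  # 30 60
```

A trained model could weigh far richer cues than raw pixel differences (camera pans, scene cuts, content genre), which is where the report expects the real gains to come from.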

Dynamic resolution encoding extends compression techniques that streaming providers already use. In this approach, resolution and bit rate choices are made on a scene-by-scene basis using encode ladders (sets of resolution and bit-rate pairs) to optimize file storage and streaming bandwidth. Using AI, however, would remove the need for encode ladders.

“Replacing this ‘brute force’ approach not only reduces computation, but also helps improve sustainability by banishing unnecessary energy usage,” says InterDigital.

This applies to offline encoding as well. Netflix, for instance, has been using AI to avoid exhaustively encoding every parameter combination, with neural-network-based methods identifying the optimal selection to reduce file sizes.
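The "brute force" approach InterDigital refers to can be sketched in Python as an exhaustive search: each candidate resolution is trial-encoded at each ladder bit rate, and the highest-quality resolution is kept for each rung. The `TrialEncode` type, the `build_ladder` helper and all quality scores are hypothetical placeholders; in practice the scores would come from a perceptual quality metric such as Netflix's VMAF.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrialEncode:
    height: int         # vertical resolution of the trial encode, e.g. 1080
    bitrate_kbps: int   # target bit rate of the trial encode
    quality: float      # quality score (hypothetical values below, not measurements)


def build_ladder(trials: list[TrialEncode]) -> list[TrialEncode]:
    """For each bit rate, keep the resolution that scored highest (exhaustive search)."""
    best: dict[int, TrialEncode] = {}
    for t in trials:
        current = best.get(t.bitrate_kbps)
        if current is None or t.quality > current.quality:
            best[t.bitrate_kbps] = t
    return [best[b] for b in sorted(best)]


# Hypothetical scores for one scene: every resolution is encoded at every bit rate.
trials = [
    TrialEncode(540, 1000, 70.0), TrialEncode(720, 1000, 66.0), TrialEncode(1080, 1000, 58.0),
    TrialEncode(540, 3000, 80.0), TrialEncode(720, 3000, 84.0), TrialEncode(1080, 3000, 82.0),
    TrialEncode(540, 6000, 83.0), TrialEncode(720, 6000, 89.0), TrialEncode(1080, 6000, 93.0),
]

for rung in build_ladder(trials):
    print(rung.bitrate_kbps, "kbps ->", f"{rung.height}p")
# 1000 kbps -> 540p
# 3000 kbps -> 720p
# 6000 kbps -> 1080p
```

Nine trial encodes are needed here for three rungs, and the cost grows as resolutions × bit rates for every scene. A model that predicts the winning resolution directly would aim to skip most of those encodes.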

The third focus area, layering, is aimed at delivering higher-resolution content. Using scalable techniques, UHD videos are encoded as a base layer at HD resolution plus an enhancement layer that conveys the extra data required to reconstruct UHD frames. HD-only receivers ignore the enhancement data, whereas a 4K-capable product uses the additional layer to decode and reconstruct the full UHD stream.
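A toy Python version of the base-plus-enhancement split is shown below, assuming NumPy and a single grey-scale frame with even dimensions. The base layer is a 2x2-averaged, half-resolution picture, and the enhancement layer is the full-resolution residual left after upsampling the base. Real scalable codecs quantize and entropy-code both layers, so unlike this lossless sketch they do not reconstruct the source exactly; `encode_layers` and `decode` are hypothetical names.

```python
from typing import Optional

import numpy as np


def encode_layers(frame: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Split a frame into a half-resolution base layer and a full-resolution residual."""
    h, w = frame.shape  # assumes both dimensions are even
    full = frame.astype(np.int16)
    # Base layer: 2x2 average pooling halves each dimension (e.g. 2160p -> 1080p).
    base = full.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3)).round().astype(np.int16)
    # Prediction: nearest-neighbour upsampling of the base layer back to full size.
    predicted = np.repeat(np.repeat(base, 2, axis=0), 2, axis=1)
    # Enhancement layer: whatever the upsampled base layer failed to capture.
    enhancement = full - predicted
    return base, enhancement


def decode(base: np.ndarray, enhancement: Optional[np.ndarray] = None) -> np.ndarray:
    """HD-only receivers pass no enhancement layer; UHD receivers add it back."""
    if enhancement is None:
        return base
    return np.repeat(np.repeat(base, 2, axis=0), 2, axis=1) + enhancement


rng = np.random.default_rng(1)
uhd = rng.integers(0, 256, size=(216, 384)).astype(np.uint8)  # small stand-in "UHD" frame
base, enh = encode_layers(uhd)
print(decode(base).shape)                      # (108, 192)
print(np.array_equal(decode(base, enh), uhd))  # True
```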

AI-derived methods are also likely to extend beyond these traditional techniques. For example, AI could reconstruct video using low-resolution reference images alongside metadata describing the context, “delivering a credible approximation to the original video with a significant reduction in bandwidth.”

While ML and AI have a place in helping define current and future video coding, InterDigital says that the industry isn’t about to drop its existing tools and models.

“The industry concurs that traditional video coding tools presently outperform AI-based alternatives in most areas today,” it states. “There are over 30 years of engineering effort and hundreds of companies involved in perfecting video compression standards; this isn’t easily replicated or supplanted by introducing AI into the discipline.”

For instance, the complexity of neural networks “is often exceptionally high,” which leads to a proportional increase in energy usage.

“This leads to the inevitable questions around the scalability of such solutions, and the impact of media on environmental sustainability,” InterDigital says.

There are other challenges with AI-based video development. One is measurement. While a conventional video standard is fully described and verified against agreed metrics, “when using AI, it is sometimes difficult to explain exactly how the implementation operates; there must be an understanding on how AI-based algorithms adhere to the specifications and produce standards-compliant bitstreams.”

Part of the work of the JVET AhG11 is to establish clear rules by which AI methods can be assessed and made reproducible.

Then there’s the sheer pace of development in AI, which has led to the generation of synthetic media. With synthetic media, instead of transmitting an image for every frame using existing compression techniques, systems can use AI to identify key data points describing the features of a person’s face. A compact representation is then sent across the network, where a second AI engine reconstructs the original image from the point data.
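To give a sense of scale for the "compact representation", the Python sketch below packs a set of face keypoints into 16-bit integers and compares the payload with an uncompressed 1080p frame. The 68-landmark count, the 30fps rate and the packing format are assumptions for illustration; the report does not specify them, and the generative model that rebuilds the face on the receiving side is out of scope here. Conventionally compressed video is far smaller than raw frames, so the practical saving is much less dramatic than this raw comparison suggests.

```python
import struct

NUM_LANDMARKS = 68  # assumption: a common face-landmark convention, not from the report
FPS = 30            # assumption: frame rate of the talking-head stream
RAW_1080P_FRAME_BYTES = 1920 * 1080 * 3 // 2  # one uncompressed 8-bit YUV 4:2:0 frame


def pack_keypoints(points: list[tuple[int, int]]) -> bytes:
    """Serialise (x, y) pixel coordinates as little-endian unsigned 16-bit ints (0-65535)."""
    flat = [c for p in points for c in p]
    return struct.pack(f"<{len(flat)}H", *flat)


def unpack_keypoints(payload: bytes) -> list[tuple[int, int]]:
    """Inverse of pack_keypoints: rebuild the list of (x, y) pairs."""
    n = len(payload) // 2  # each coordinate occupies 2 bytes
    flat = struct.unpack(f"<{n}H", payload)
    return list(zip(flat[0::2], flat[1::2]))


# Hypothetical keypoints for one frame (real ones would come from a face-landmark model).
points = [(100 + i, 200 + 2 * i) for i in range(NUM_LANDMARKS)]
payload = pack_keypoints(points)
bits_per_second = len(payload) * 8 * FPS

print(len(payload), "bytes of keypoints per frame")              # 272 bytes of keypoints per frame
print(bits_per_second, "bit/s at 30 fps")                        # 65280 bit/s at 30 fps
print(RAW_1080P_FRAME_BYTES, "bytes per raw 1080p 4:2:0 frame")  # 3110400 bytes per raw 1080p 4:2:0 frame
```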

Consequently, InterDigital thinks it may become unnecessary to send video frames, and instead systems might describe scenes using metadata.

The next evolution is data-driven synthetic media, created in near real time and used in place of traditional media, which could see hyper-personalized video advertising created and delivered within seconds.

“Cloud and device AI processing capability will undoubtedly need to develop substantially for this to happen at scale,” says InterDigital, “however the future for video coding and transmission certainly seems destined for significant transformation.”