TL;DR

- Video accounts for more than 80% of all internet traffic, and live streaming accounts for 60% of downstream internet traffic, making the need to resolve bandwidth challenges more urgent than ever.

- For live streaming applications, the industry is turning to edge computing to help reduce latency and bandwidth costs by bringing processing and storage closer to users.

- In edge computing, cloud providers configure edge servers in last-mile data centers as part of their CDN services, and content providers deliver streams to the edge servers that are closest to the user.

- Edge computing is a distributed, open IT architecture that decentralizes processing power and could reduce the need for large data centers for storage and delivery.

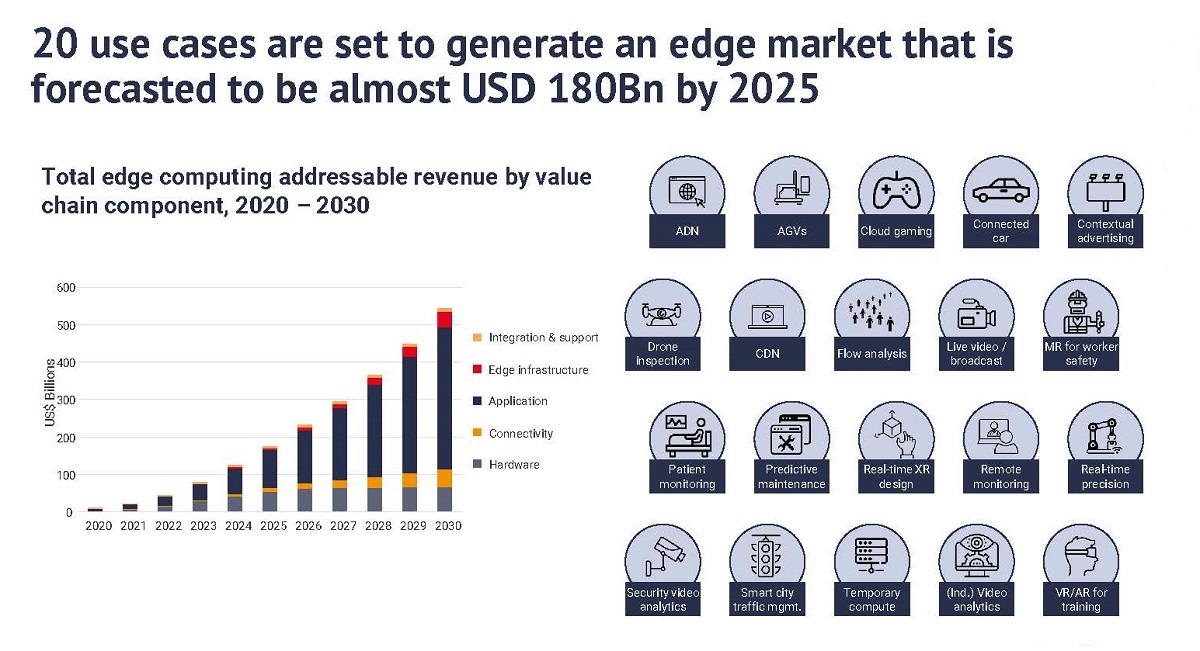

- The edge market across all industries is forecast to be worth $180 billion by 2025.

With video accounting for more than 80% of all internet traffic, bandwidth utilization, escalating bandwidth needs, and energy costs all pose challenges.

For live streaming applications in particular, the industry is turning to edge computing to help reduce latency and bandwidth costs by bringing processing and storage closer to users. That focus makes sense: live streaming accounts for 60% of downstream internet traffic.

READ MORE: VNI Complete Forecast Highlights (Cisco)

READ MORE: 68 Live Streaming Stats to Help You Create New Revenue Streams (Influencer Marketing Hub)

If service providers want to meet the increasing consumer expectations to interact with video and to capitalize on fabled next-gen applications like VR, massive multiplayer mobile gaming, and multi-view video, then edge computing will give them a significant, well, edge.

“These [next-gen] applications are delay-intolerant and require real-time response to maintain users’ quality of experience,” Naren Muthiah, who leads strategy and business design functions for Cox Edge, an edge cloud service from Cox Communications, writes in The New Stack. “They are also bandwidth-hungry, resulting in escalating bandwidth costs and energy consumption.”

Service providers have barely gotten used to moving storage and compute processes out of their own facilities and into the cloud. Now, for those keen to capture revenue from games or ultra-low-latency metaverse activations, the next step is to work with communications service providers and CDNs at the network edge.

With edge computing, cloud providers configure edge servers in last-mile data centers as part of their CDN services. Content providers deliver streams to the edge servers that are closest to the user.

“Since the edge network is generally fewer hops away from the user, requested views can be streamed with minimal delay from edge servers,” Muthiah explains. “This can reduce latency compared to streaming directly from the content provider.”
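To make the "closest edge server" idea concrete, here is a minimal sketch of edge selection. It assumes the viewer (or a steering service) already has round-trip-time measurements for a set of hypothetical edge nodes; the server names, RTT values and the `pick_server` helper are illustrative only. Production CDNs usually steer traffic with DNS or anycast rather than a client-side loop like this.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Server:
    name: str
    rtt_ms: float          # measured round-trip time from the viewer (hypothetical)
    healthy: bool = True   # unhealthy nodes are skipped


def pick_server(edges: List[Server], origin: Server) -> Server:
    """Return the healthy edge with the lowest RTT.

    Falls back to the origin when no healthy edge exists or when the best
    edge is not actually closer (in RTT terms) than the origin.
    """
    candidates = [s for s in edges if s.healthy]
    best = min(candidates, key=lambda s: s.rtt_ms, default=None)
    if best is None or best.rtt_ms >= origin.rtt_ms:
        return origin
    return best


if __name__ == "__main__":
    origin = Server("origin-us-east", rtt_ms=95.0)
    edges = [
        Server("edge-london", rtt_ms=12.0),
        Server("edge-manchester", rtt_ms=8.0, healthy=False),
        Server("edge-paris", rtt_ms=21.0),
    ]
    print(pick_server(edges, origin).name)  # edge-london
```

Using RTT rather than geographic distance mirrors Muthiah's point: what matters to the viewer is how few hops (and milliseconds) separate them from the server, not the map.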

READ MORE: How Edge Computing Makes Streaming Flawless (The New Stack)

One advantage of edge computing is that providers can offload the generation of different video streams to the edge servers. Such “virtual view” generation can also be adapted to suit bandwidth conditions and resources at the edge or on the client’s device to optimize quality of experience.
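As a rough illustration of adapting a generated view to bandwidth conditions, the sketch below picks a rendition from a bitrate ladder based on measured throughput, keeping a safety margin for network fluctuations. The ladder values, the 0.8 safety factor and the `select_rendition` function are assumptions for illustration, not taken from any specific edge platform.

```python
from typing import List, Tuple

# Hypothetical bitrate ladder for one "virtual view": (label, kbps), highest first.
LADDER: List[Tuple[str, int]] = [
    ("1080p", 6000),
    ("720p", 3500),
    ("480p", 1800),
    ("360p", 900),
]


def select_rendition(throughput_kbps: float, safety: float = 0.8,
                     ladder: List[Tuple[str, int]] = LADDER) -> str:
    """Pick the highest rendition whose bitrate fits within a safety margin
    of the measured throughput; fall back to the lowest rung otherwise."""
    budget = throughput_kbps * safety
    for label, kbps in ladder:
        if kbps <= budget:
            return label
    return ladder[-1][0]


if __name__ == "__main__":
    print(select_rendition(5000))  # budget 4000 kbps -> 720p
    print(select_rendition(500))   # nothing fits -> lowest rung, 360p
```

Running this decision at the edge, close to the viewer, means the server can react to that viewer's conditions without a round trip to the origin.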

Edge compute vendor Videon is, naturally, confident about the technology’s potential to supercharge interactive content.

“Edge computing for live video streaming enables a host of flexible and reliable cloud computing functions to be brought to the point where video is created,” writes Videon founder and president Todd Erdley in Streaming Media. “Placing this capability as close to the video source as possible simplifies live video workflows, reduces latency, enables faster deployment of standardized protocols across networks of devices, and eliminates unnecessary operational costs.”

Erdley believes edge computing empowers live video broadcasters and content creators to continue innovating and building the functions and capabilities they need to create customized live video workflows. “Rather than dictating how they should operate, edge computing is an innovation-enabler that helps media companies shape their present and future.”

For example, he says that sports leagues and broadcasters can use edge computing capabilities to get more feeds into their production workflows without spending huge amounts on additional encoding equipment, while also decreasing operational costs. He adds that using edge computing at the point of video origination in concert with cloud computing delivers “improved quality resulting from needing to encode only once vs. twice with the traditional encoder or cloud workflow.”

By doing more at the edge rather than in the cloud, Erdley claims that certain video use cases can halve operating expenses (OPEX) and cut latency from tens of seconds to under 200 milliseconds.

In this scenario, edge compute is complementary to workflows and processes — such as encoding — in the cloud. Taking a hybrid approach by using an edge computing platform to augment the cloud gives end-users, developers, and media companies the freedom and flexibility they require.

READ MORE: How Edge Computing Will Revolutionize Live Video Streaming (Streaming Media)

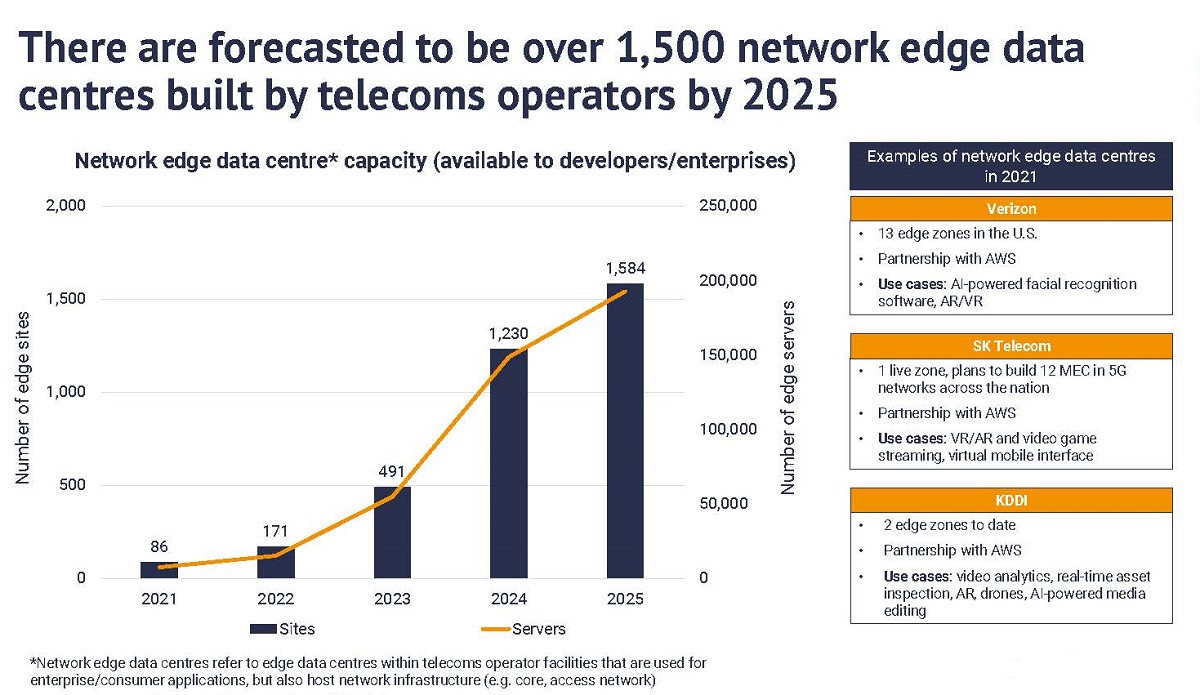

In a report from telecom consultancy STL Partners, the edge market across all industries is forecast to be worth $180 billion by 2025. The company also reckons that telecom operators alone will build more than 1,500 network edge data centers by 2025. Non-telcos, including CDNs such as Akamai and data center operators such as Oxford, UK-based Proximity Data Centers, are also building out edge services. Demand is being driven by the main (hyperscale) cloud providers: Microsoft, Amazon, Google and Alibaba.

“Edge computing essentially allows companies to access the benefits of cloud closer to the end-user,” the report states. “The possibility of various business models when monetizing edge computing is attractive.”

READ MORE: Edge computing market overview (STL Partners)

Will the Edge Really Get to the Network Edge?

Data analyst firm IDC defines the edge as “the multiform space between physical endpoints… and the core,” with the core defined as the “backend sitting in cloud locations or traditional datacenters.”

HP Enterprise says edge computing is “a distributed, open IT architecture” that decentralizes processing power. It says edge computing requires that “data is processed by the device itself or by a local computer or server, rather than being transmitted to a data center.”

Both definitions say that edge computing isn’t meant to occur at the data center, raising the question of whether large data centers will continue to be the go-to model for both storage and delivery.

Based on responses to a recent State of Streaming survey produced by Streaming Media, the answer will involve a mix of small regional data centers focused on smaller towns and cities alongside the behemoth data centers located near major metropolitan areas.

According to the survey, a clear majority of industry respondents (65%) plan to use smaller, regional data centers as part of their edge strategies.

READ MORE: State of Streaming Spring 2022 Report Launches (Streaming Media)

Tim Siglin, founding director of the not-for-profit Help Me Stream Research Foundation, points out that the digital divide (the gap between those who can access fast broadband and those in rural or poorer communities who cannot) was thrown into stark focus during the pandemic.

“In rural areas local homes might have plenty of Wi-Fi and maybe even decent downlink connectivity to allow viewing of cached on-demand content, but almost no ability to backhaul (meaning limited ability to participate in Zoom classes, business meetings, or FaceTime with relatives),” he says.

He provides figures suggesting that this is a massively under-served market, so even if there’s no political or moral motivation to bring the edge closer to home, there is a compelling commercial argument.

“According to World Bank estimates, on a global scale, approximately 44% of people live in remote or rural locations. While each of the individual pockets of connectivity is small, and the backhaul for live-event streaming from these rural areas may be expensive, in aggregate, this is not a small population that can be ignored from either a societal or economic standpoint.”

Siglin also turns to Steve Miller-Jones, VP of product strategy at Netskrt, a company focused on getting content to the far reaches of the internet: not just remote or rural locations but also moving targets such as airplanes, buses and trains.

“If we come back to how we think about capacity planning for large events or capacity planning going out five or 10 years in the future,” says Miller-Jones, “the statistical relevance sphere is about large populations that are well-connected. But there’s 44% of the world that, from a single-event standpoint, may not be significant, but it is relevant to our content provider and their access to audience, their increase of subscribers, churn rate, and even revenue.”

Trains offer sizable captive audiences. On UK domestic services alone, with 3,500 trains and an average journey lasting just under two hours, that means access to nearly two billion passenger trips a year, says Siglin (well, in theory, when the trains run and there aren’t strikes, Tim).

“So, it makes sense why solving the challenge of edge computing in this scenario means access to a significant and previously untapped audience that’s probably more inclined to consume content as they hurtle across the countryside.”

Data centers are shrinking, with smaller local facilities springing up in towns. The edge can also sit in mini data centers within the mobile network, combining the benefits of edge with 5G.

READ MORE: Why Edge Computing Matters in Streaming (Streaming Media)

Edge Concept 101

To explain the concept of edge compute, let’s start with a 10,000-foot view using an analogy from Videon. Consider the first generation of mobile phones. The ability to make calls while moving or away from a fixed location was a significant improvement over the landline. Yet the use case was centered on voice conversations and, later, simple text messages. These phones were technologically more advanced than landlines, but all their functions were built into the hardware by the manufacturer. If you wanted more or different features, you had to buy another phone.

Now compare that to the first smartphones. Although the initial use case was very similar, the difference was the ability to run applications. It’s true that the first applications smartphones shipped with were voice and text. But with a simple download from an app store, the smartphone became a mapping tool, web browser, taxi booking service, baby monitor and, today, much more besides. Voice calls and texting were just applications rather than the sole purpose of the device.

This is the central tenet of edge computing for live video. Instead of having a fixed device that does just one video processing task, you can deploy a device that can do anything you program it to do based on its available processing power, storage and software applications.

Place this capability as close to the video source as possible to reduce transit costs and latency. If a workflow needs to change, adapt the software rather than replace the device. And to drive mass adoption, the device needs to be, like the smartphone, a simple and reliable “appliance” rather than a traditional PC, so users avoid managing complex layers of disparate hardware, drivers, operating systems and peripherals.
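The "adapt the software rather than replace the device" idea can be sketched as a device that runs whatever sequence of named processing stages it is configured with. Everything here is hypothetical: the stage names, the dictionary standing in for a video frame, and the `stage`/`run` helpers are illustrations of the app-style model, not Videon's actual platform or API.

```python
from typing import Callable, Dict, List

Frame = Dict[str, object]          # stand-in for a video frame plus metadata (hypothetical)
Stage = Callable[[Frame], Frame]

REGISTRY: Dict[str, Stage] = {}    # the device's installed "apps"


def stage(name: str) -> Callable[[Stage], Stage]:
    """Decorator that installs a processing function under a name."""
    def register(fn: Stage) -> Stage:
        REGISTRY[name] = fn
        return fn
    return register


@stage("overlay")
def add_overlay(frame: Frame) -> Frame:
    return {**frame, "overlay": "score-bug"}


@stage("encode")
def encode(frame: Frame) -> Frame:
    return {**frame, "codec": "h264"}


@stage("package")
def package(frame: Frame) -> Frame:
    return {**frame, "container": "fmp4"}


def run(workflow: List[str], frame: Frame) -> Frame:
    """Apply the named stages in order. Changing `workflow` changes what
    the device does without touching its hardware."""
    for name in workflow:
        frame = REGISTRY[name](frame)
    return frame


if __name__ == "__main__":
    print(run(["encode", "package"], {"id": 1}))
    # A new requirement (adding a graphics overlay) is just a config change:
    print(run(["overlay", "encode", "package"], {"id": 1}))
```

Installing a new capability means registering another stage, much as a smartphone gains a feature by downloading an app.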

OUR HEADS ARE IN THE CLOUD:

The cloud is foundational to the future of M&E, so it’s crucial to understand how to leverage it for all kinds of applications. Whether you’re a creative working in production or a systems engineer designing a content library, cloud solutions will change your work life. Check out these cloud-focused insights hand-picked from the NAB Amplify archives:

- Get Onto My Cloud: Why Remote Production Tools Are Used by 90% of Video Professionals

- TV Production in the Cloud: The Whys and Hows

- SaaS, IaaS, PaaS: Cloud Computing Class Is in Session

- Take a Tour of the Global Cloud Ecosystem

- Choosing Between Cloud-Native and Hybrid Storage (Spoiler: You May Not Need To)