TL;DR

- Many machine learning researchers worry that AI could lead to extremely bad outcomes for humanity. Bad actors could seize on large language models to engineer falsehoods at unprecedented scale.

- By talking so much about the technology of AI, are we ignoring the business models, good and bad, that will power it?

- There are calls for a debate about what AI should be developed for, but with the genie out of the bottle, it could be that any attempt to brake development is already futile.

READ MORE: Why Are We Letting the AI Crisis Just Happen? (The Atlantic)

After a period lasting about a year when all things AI were generally considered a benign boost to human potential (the worst that could happen was you could lose your job to ChatGPT), the dystopian winds have blown back in.

A number of commentators are calling for a brake on AI development while the risks of its use are assessed. That is an unlikely scenario, since there appears to be no appetite for it among governments, legislators, big tech, or the AI coding community itself.

With tech companies rushing out AI models without any apparent safeguards (for accuracy, say), cognitive scientist Gary Marcus thinks this is a moment of immense peril. In The Atlantic, he writes, “The potential scale of this problem is cause for worry. We can only begin to imagine what state-sponsored troll farms with large budgets and customized large language models of their own might accomplish.

“Bad actors could easily use these tools, or tools like them, to generate harmful misinformation, at unprecedented and enormous scale.”

READ MORE: Microsoft’s Bing AI demo called out for several errors (CNN)

He quotes Renée DiResta, the research manager of the Stanford Internet Observatory, warning (in 2020) that the “supply of misinformation will soon be infinite.”

Marcus says that moment has arrived.

“Each day is bringing us a little bit closer to a kind of information-sphere disaster,” he warns, “in which bad actors weaponize large language models, distributing their ill-gotten gains through armies of ever more sophisticated bots.”

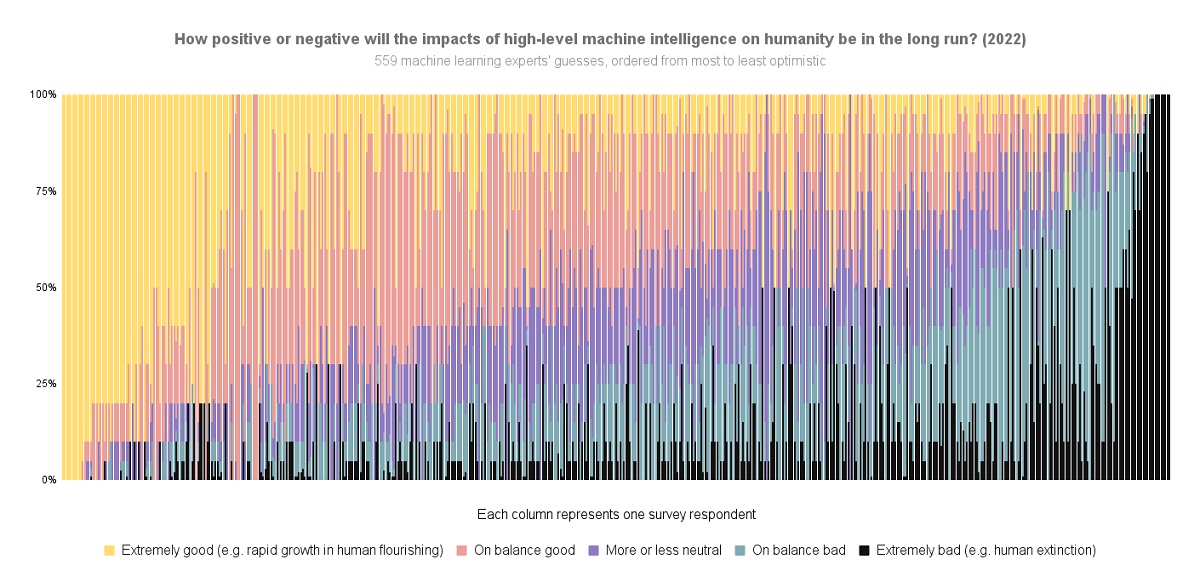

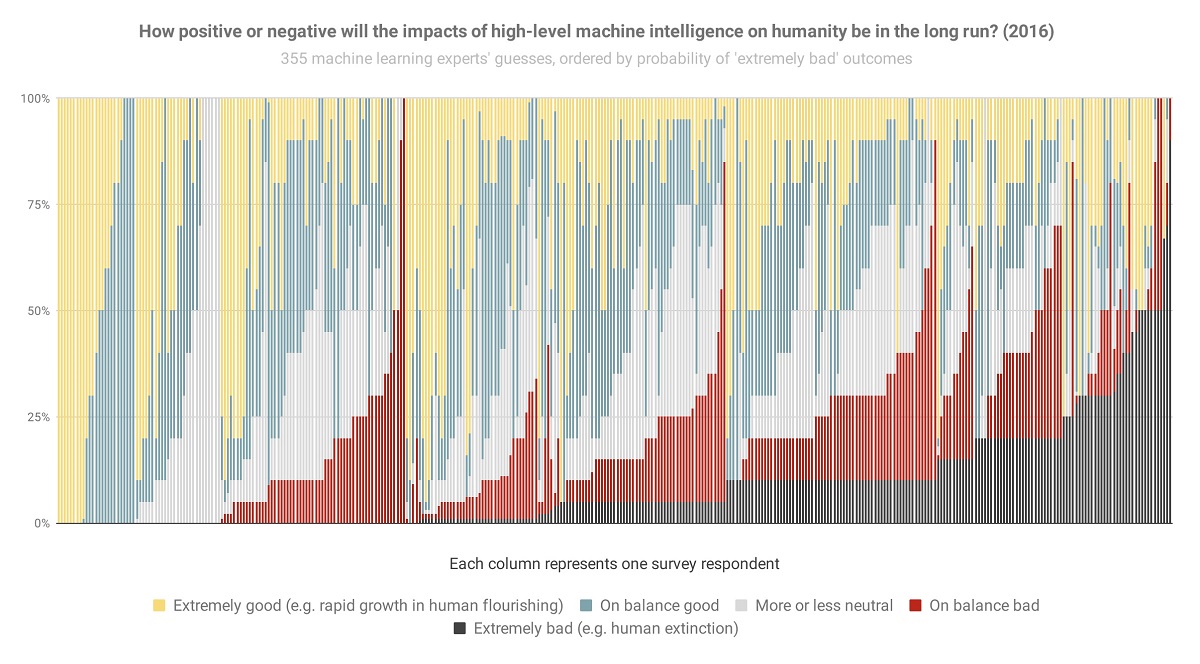

His comments coincide with the results of an annual survey of machine learning researchers conducted by AI Impacts. In its latest report, 14% of participants said they expected AI outcomes to be ‘extremely bad’ and nearly a third (31%) thought AI would, on the whole, make the world markedly worse.

READ MORE: How bad a future do ML researchers expect? (AI Impacts)

To be clear, AI Impacts chief researcher Katja Grace says that “extremely bad” here means outcomes on the level of human extinction.

She added, “The most notable change to me is the new big black bar of doom: people who think extremely bad outcomes are at least 50% have gone from 3% of the population to 9% in six years.”

In another op-ed, The New York Times columnist Ezra Klein expresses dismay at what he sees as the lack of urgency among AI developers to do anything about the Frankenstein they are unleashing.

He says the people working on AI in the Bay Area inhabit “a community that is living with an altered sense of time and consequence. They are creating a power that they do not understand at a pace they often cannot believe.

“We do not understand these systems, and it’s not clear we even can,” he continues. “That is perhaps the weirdest thing about what we are building: The ‘thinking’ for lack of a better word, is utterly inhuman, but we have trained it to present as deeply human. And the more inhuman the systems get — the more billions of connections they draw and layers and parameters and nodes and computing power they acquire — the more human they seem to us.”

Klein seems less concerned about machine-led mass human extinction than he is about having even more of his data — and therefore media consumption — turned into advertising dollars by Microsoft, Google and Meta.

Since these tech giants hold the keys to the code, he thinks they will eventually “patch the system so it serves their interests.”

His wider point, though, is to warn that we are so stuck on asking what the technology can do that we are missing the more important questions: How will it be used? And who will decide?

“Somehow, society is going to have to figure out what it’s comfortable having AI doing, and what AI should not be permitted to try, before it is too late to make those decisions.”

READ MORE: This Changes Everything (The New York Times)

Marcus offers some remedies. Watermarking is one idea for tracking content produced by large language models, but he acknowledges this is likely insufficient.

“The trouble is that bad actors could simply use alternative large language models to create whatever they want, without watermarks.”

A second approach is to penalize misinformation when it is produced at large scale.

“Currently, most people are free to lie most of the time without consequence,” Marcus says. “We may need new laws to address such scenarios.”

A third approach would be to build a new form of AI that can detect misinformation, rather than simply generate it. Even in a system like Bing’s, where information is sourced from the web, mistruths can emerge once the data are fed through the machine.

“Validating the output of large language models will require developing new approaches to AI that center reasoning and knowledge, ideas that were once popular but are currently out of fashion.”

Both Marcus and Klein express alarm at the lack of urgency to deal with these issues. Bearing in mind that the 2024 Presidential elections will be upon us soon, Marcus warns they “could be unlike anything we have seen before.”

Klein thinks time is rapidly running out to do anything at all.

“The major tech companies are in a race for AI dominance. The US and China are in a race for AI dominance. Money is gushing toward companies with AI expertise. To suggest we go slower, or even stop entirely, has come to seem childish. If one company slows down, another will speed up. If one country hits pause, the others will push harder. Fatalism becomes the handmaiden of inevitability, and inevitability becomes the justification for acceleration.”

One of two things must happen, he suggests, and Marcus agrees: “Humanity needs to accelerate its adaptation to these technologies or a collective, enforceable decision must be made to slow the development of these technologies. Even doing both may not be enough.”

