AI is being greeted with equal amounts of enthusiasm and skepticism within media and entertainment. It can change the economics, but what does that mean for you?

Jeff Bezos called it in 2017: Artificial intelligence is “a renaissance, a golden age,” Amazon’s CEO said. This year, Google CEO Sundar Pichai said that AI is “more profound than fire or electricity.” In October, Nvidia CEO Jensen Huang declared the age of AI had begun: “AI is the most powerful technology force of our time.”

Computing is the technology of automation. Apple, Microsoft and the other tech giants believe software algorithms, the abundance of data and the powerful computation of GPUs have come together in a big bang of modern AI.

When it comes to media, AI/ML is broadly welcomed if it can transform the economics of production. For example, AI/ML research promises to deliver the next wave of video compression technologies to cut the bitrate cost of transporting data. Algorithms can also be used to predict demand so that resources can be adjusted, or to predict disruptions in the content supply chain, either of which could bring sizable savings.

“AI is the automation of automation.”

JENSEN HUANG, CEO, NVIDIA

Dr. Danny Lange, SVP of AI/ML at Unity, is in no doubt: “What sets AI apart from traditional human constructed software tools is that it learns its skill and capabilities from massive amounts of data. It also creates a virtuous feedback loop with its users so that it keeps learning and becomes more capable over time. Not only does the individual creator become more productive they also become more creative as the AI accelerates creativity itself. If you, as a creator, can pursue more branches in the creative process at a much faster pace than with traditional tools you are bound to deliver higher quality content.”

In Hollywood, there’s both tremendous appetite and skepticism about using machine intelligence in general, and especially in the production process, according to Yves Bergquist, Founder and CEO at AI developer Corto and Program Director, AI and Neuroscience in Media at USC’s Entertainment Technology Center (ETC). “The technical community in Hollywood is extremely well informed, extremely dedicated to the final creative product, and extremely resistant to hype.”

Here, we look inside the machine:

AI in Postproduction

Media companies faced with a deluge of data are drinking from a fire hydrant. The practical implications of the growing global content economy, in which more and more content is being produced across more platforms, have vendors turning to AI/ML tools to help their customers manage.

Adobe is developing Sensei within its Creative Cloud suite to automate mundane and time-consuming tasks, which allows creatives to spend more time on inspiration and design. For instance, speech to text in Premiere Pro enables users to automatically create a transcript from their video, then generate captions on the timeline. Roto Brush 2 tracks selected objects, frame by frame, automating the time-consuming process of manual rotoscoping. Exploratory technologies from Adobe’s researchers include Project On the Beat, which identifies out-of-rhythm body movements and aligns them to generate beat-synced videos.
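To make the transcript-to-captions step concrete, here is a minimal sketch in Python. It is not Adobe's implementation and does not use any Premiere Pro API. It assumes a speech-to-text engine has already produced words with start and end times, and the `Word` type, the `max_chars` and `max_gap` limits, and the choice of SRT as the output format are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start: float  # seconds
    end: float    # seconds


def group_into_captions(words, max_chars=32, max_gap=0.8):
    """Greedily pack timed words into caption cues of (text, start, end)."""
    cues = []
    current = []
    for word in words:
        if current:
            line = " ".join(w.text for w in current)
            too_long = len(line) + 1 + len(word.text) > max_chars
            long_pause = word.start - current[-1].end > max_gap
            if too_long or long_pause:
                # Close the current cue before starting a new one
                cues.append((line, current[0].start, current[-1].end))
                current = []
        current.append(word)
    if current:
        line = " ".join(w.text for w in current)
        cues.append((line, current[0].start, current[-1].end))
    return cues


def srt_timestamp(seconds):
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, ms = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(cues):
    """Render cues as the text of an SRT subtitle file."""
    blocks = []
    for index, (text, start, end) in enumerate(cues, start=1):
        blocks.append(
            f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text}"
        )
    return "\n\n".join(blocks) + "\n"
```

A cue is closed either when the line would exceed the character limit or when the speaker pauses, which keeps captions short and roughly aligned with natural phrasing. A production tool would also handle speaker changes, punctuation and reading speed.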

“Adobe Sensei is blending the art of human creativity with the science of data,” explains Bill Roberts, Industry Strategist at Adobe. “What we are not doing is creating features and functions that are ‘black boxes’ that the user can’t peek into. We are very committed to maintaining artistic integrity and creative control in every iteration of a Sensei-powered tool.”

“There is tremendous value in leveraging AI in editing workflows to help humans get over creative roadblocks. This is because AI can be a completely dispassionate voice that can ingest your media and offer suggestions. For example, if you’re editing a documentary and can’t figure out how to pair audio and video in a specific section, the AI can understand what your voice over is saying and what the sync sound clip is saying, and then suggest footage in your project that can provide coverage and amplify the inferred meaning. Humans tend to ‘get stuck’ looking at the same content again and again, and a fresh suggestion can often be just the right thing to nudge the creative journey in a new direction.”

BILL ROBERTS, INDUSTRY STRATEGIST, ADOBE

Adobe sees AI acting as a “virtual creative muse” for users, expediting elements of the creative workflow such as automatically reframing and reformatting video content for different aspect ratios.

“AI is evolving to become one’s muse, mentor, or assistant — working at the goal or intent level — to help realize one’s creative vision faster and more efficiently,” explains Roberts.

Other tools like Content Aware Fill in After Effects remove pesky distractions like boom mics or out-of-place signs in a shot with a few clicks. An auto reframe tool is claimed to slash hours off the time to publish content.

“The value of leveraging AI and ML in post-production becomes undeniable,” says Roberts. “We firmly believe that AI done right will amplify — not replace — human creativity and that it holds immense economic value.”

Unity is also building AI/ML capabilities directly into its editor tools and, over time, expects those to blend together and offer a fully collaborative experience.

“AI is really good at collecting and learning from large amounts of input which is something that is useful for it to become a more intimate collaborator,” Lange explains. “A collaborative AI tool would make suggestions based on history, context, objectives, and trends — perhaps even provoke the creator with deliberately conflicting suggestions to spur the creative process.”

Today Unity MARS enables creators to build AR apps that are context-aware and responsive to physical space. The Unity Simulation service allows creators to generate and test millions of scenarios to extend the horizon of workable designs.

Lange says, “It is Unity’s mission to equip creators everywhere with the power of AI to all aspects of assisted content authoring to reduce the complexity of creating the best real-time 3D experiences in the world.”

AI Scripts

If postproduction and distribution are the areas where ML is most applied today, the studios are taking a very serious look at optimizing the production process, because this is where the biggest costs are.

“Studios are also very dedicated to the creative process, and they want to make sure optimization doesn’t get in the way of creatives,” Bergquist stresses. “There are a lot of complexities around generating clean and structured data throughout production, simply because of the inherent complexities around the production process, and the hundreds of different players involved (most of which don’t have data at the heart of their concerns).”

SMPTE and the ETC are in the early stages of standardizing this process. “Ethics are an obvious place, but we haven’t dived into it enough,” he says.

The most controversial issue is whether AI can or should be a content creator or creative partner in its own right. Bergquist says that AI is nowhere near ready to write a script that can be produced into an even mediocre piece of marketable content.

The launch earlier this year of OpenAI’s GPT-3, a language model that uses deep learning to generate human-like text, has raised many questions in the creative community.

“Language models like GPT-3 are extremely impressive, and there’s a place for them in automating simple content creation (like blog posts), but there will need to be a real cultural shift in how the entire AI field thinks about intelligence for [AI generated scripts] to happen.”

Bergquist thinks the entire AI field needs to reset the way it approaches “symbolic representation” if it is to develop machines capable of manipulating narrative domains and symbols on the level of humans.

ETC is building machine-driven ‘representations’ of narrative, as a step to developing a machine language for analyzing and understanding media content.

“We’ve done a lot of research and experimentation around extracting structured metrics such as narrative domains, expressed symbols, and emotional journeys of characters in scripts, and that’s already being used by some studios to evaluate and package creative work,” he reveals.

“But we’re not telling the creative what to say or write or shoot. We’re just providing context to how that creative work exists alongside other creative works, and seeing how audiences may vibe — or not — with it. This is based, for example, on how unique and interesting it is (ETC have developed a rough mathematical representation of ‘interestingness’). And by the way we’re doing this in collaboration with creatives, because we want to make sure whatever we do and develop does not hurt the art in media creation.”

Bergquist does not believe we’ll see films and shows being written by AI “without substantial human rewrites,” but we’re already seeing a lot of AI-driven context being added to the creative process.

“So far the creative community has been mostly positive about it although this is still very controversial and divisive, so we’re moving forward very carefully,” he says, adding, “We’ve been doing a lot of work with the studios on this, but they are very wary of us talking about it.”

To the question “can computers be creative?” Lange says that the real question is whether we care or not about creative works made entirely by computers.

“As humans we are looking for the human emotions and intellect behind a piece of creative content. Computers can be a part of the creative process, enabling the creator to express themselves more efficiently.”

Prior to joining Unity, Lange was the head of machine learning at Uber, and also served as GM of Amazon Machine Learning. He says, “I’ve seen artworks created by computers, but while they may look impressive, I know that they are just an expression of countless pre-existing artworks and not any particularly insightful human state of mind that evokes empathy. We still need the human presence.”

AI in Live Broadcast

We’re extremely early in applying AI/ML to live broadcast events, but news is likely the first area to see it applied at scale. Among the experiments is one led by Al Jazeera, RTÉ, Reuters and AP to use AI to aid live content moderation, essentially to separate fake news from fact and ease the burden on over-stretched compliance teams. A next step is to use AI to flag graphic video images to editorial teams in a fast-moving, breaking news environment.

The automated sports production market is rocketing and Israel-based Pixellot is scooping up business. It claims that more than 100,000 hours of live sport were streamed last month alone using its system, which costs as little as $40 per game to use.

The bulk of adoption is in U.S. college education, where Pixellot is currently outfitting more than 20,000 systems in high schools in partnership with rights holder PlayOn! Sports. The project is being paid for partly by syndicating content to third-party publishers such as Facebook, but primarily through a US$10 monthly fee via PlayOn’s OTT platform. The goal is to produce more than 1 million live event broadcasts per year by 2025.

Pixellot’s solution is based around fixed, unmanned cameras with in-built ball-tracking technology which produce and stream live HD footage at 50-60 fps, explains Yossi Tarablus, Director of Marketing.

“The camera delivers a 4-camera view which is stitched into a panorama to create a look that resembles TV coverage. We manipulate the image for things like light optimization [brightening areas of the field where shadows have been cast, for example]. Using a sport-specific algorithm and computer vision we simulate camera operation and vision mixing to follow the flow of play. We also have graphics and can replay multiple angle shots.”
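The idea of simulating camera operation over a stitched panorama can be illustrated with a small Python sketch. This is not Pixellot's algorithm. It assumes a hypothetical tracker has already produced a horizontal ball position for each frame (or `None` when the ball is lost), and it uses simple exponential smoothing to pan a crop window across the panorama. The function name, parameters and smoothing factor are all illustrative.

```python
def virtual_camera_path(ball_xs, pano_width, crop_width, smoothing=0.2):
    """Return the left edge of the crop window for each frame.

    ball_xs: per-frame horizontal ball position in panorama pixels,
             or None when the (hypothetical) tracker has lost the ball.
    """
    max_left = pano_width - crop_width
    center = pano_width / 2  # start by framing the middle of the field
    lefts = []
    for x in ball_xs:
        if x is not None:
            # Move only a fraction of the way toward the ball each frame,
            # so the virtual camera pans smoothly instead of jumping
            center += smoothing * (x - center)
        # Keep the crop window inside the panorama
        left = min(max(center - crop_width / 2, 0), max_left)
        lefts.append(round(left))
    return lefts
```

For example, `virtual_camera_path([1000, 1000, None], pano_width=4000, crop_width=1920, smoothing=0.5)` returns `[540, 290, 290]`: the window drifts toward the ball and holds its position when tracking is lost. A real system would add sport-specific rules, zoom and vertical framing on top of this.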

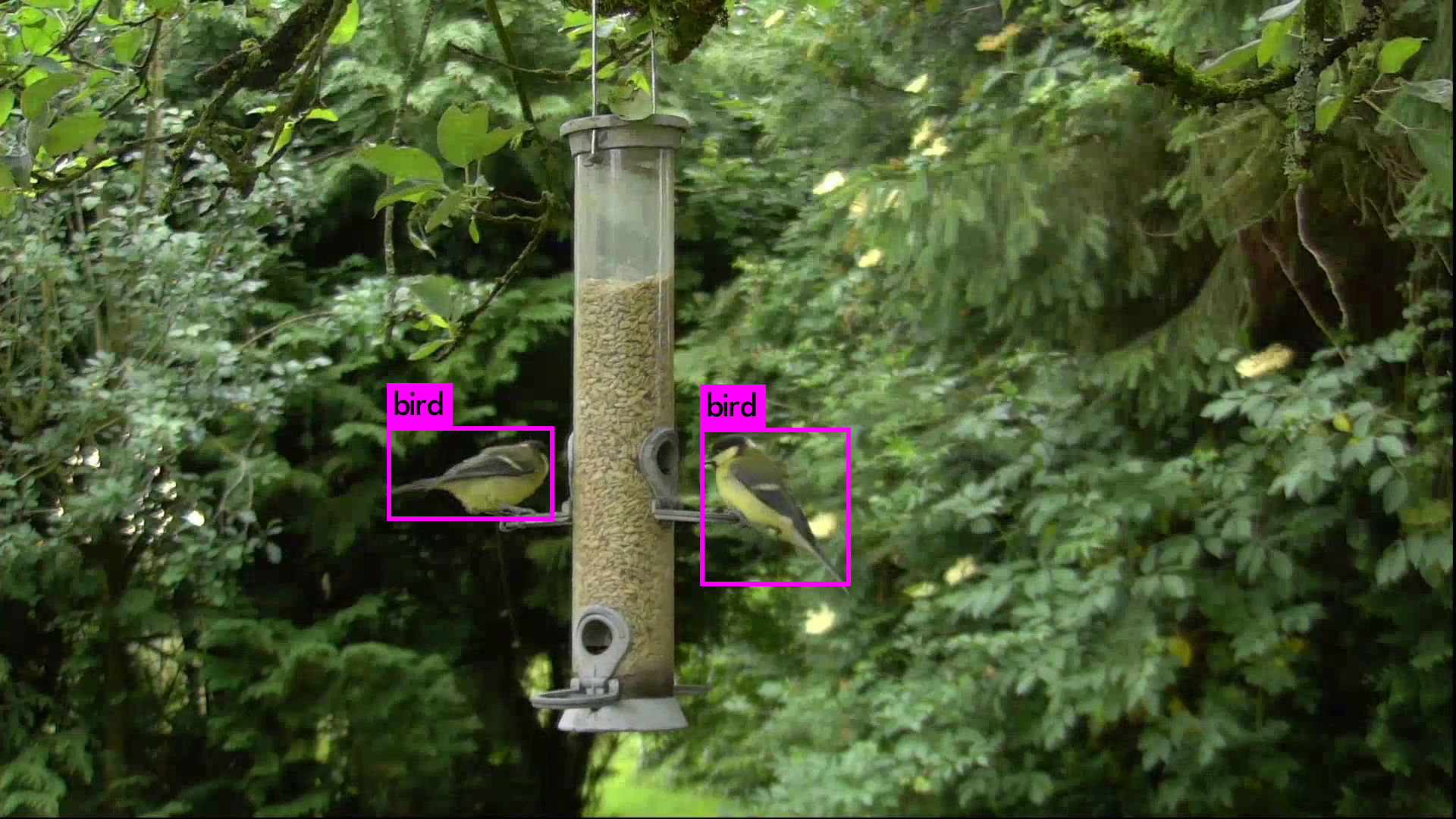

BBC Autumnwatch is a primetime natural history program that aired live in November each weekday for two weeks. It is one of the Corporation’s largest outside broadcasts (OBs), for which BBC R&D has developed an algorithm to automate logging of rushes. The tool, which is based on the YOLO neural network and runs on the Darknet open-source ML framework, is able to detect and locate animals in a scene using object recognition.

“We’ve trained a network to recognize both birds and mammals, and it can run just fast enough to find and then track animals in real-time on live video,” says senior research engineer Robert Dawes. “We store the data related to the timing and content of the events, and use this as the basis for a timeline that we provide to members of the production team. They can use the timeline to navigate through the activity on a particular camera’s video.”
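The step from per-frame detections to a navigable timeline can be sketched as follows. This is an illustration only, not BBC R&D's code, and it makes no YOLO or Darknet calls. It assumes the detector emits `(timestamp, label)` pairs in time order, and the `Event` type and the `max_gap` threshold are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Event:
    label: str
    start: float  # seconds from the start of the recording
    end: float


def build_timeline(detections, max_gap=2.0):
    """Merge per-frame detections into timeline events.

    detections: iterable of (timestamp_seconds, label) pairs sorted by time,
                in a hypothetical format a detector might emit.
    """
    open_events = {}  # label -> most recent Event for that label
    timeline = []
    for timestamp, label in detections:
        event = open_events.get(label)
        if event is not None and timestamp - event.end <= max_gap:
            # Same appearance of the animal: extend the existing event
            event.end = timestamp
        else:
            # First sighting, or the animal was gone too long: new event
            event = Event(label, timestamp, timestamp)
            open_events[label] = event
            timeline.append(event)
    return sorted(timeline, key=lambda e: e.start)
```

Merging detections that are close together in time turns thousands of per-frame hits into a handful of entries a producer can scan, such as "bird, 0.0s to 1.2s". The gap threshold trades off splitting one appearance into several events against merging separate appearances into one.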